Mirror of https://github.com/cwinfo/powerdns-admin.git, synced 2025-09-18 16:22:29 +00:00

Update docker stuff and bug fixes


.dockerignore (new file, 112 lines changed)
@@ -0,0 +1,112 @@
+### OSX ###
+*.DS_Store
+.AppleDouble
+.LSOverride
+
+# Icon must end with two \r
+Icon
+
+# Thumbnails
+._*
+
+# Files that might appear in the root of a volume
+.DocumentRevisions-V100
+.fseventsd
+.Spotlight-V100
+.TemporaryItems
+.Trashes
+.VolumeIcon.icns
+.com.apple.timemachine.donotpresent
+
+# Directories potentially created on remote AFP share
+.AppleDB
+.AppleDesktop
+Network Trash Folder
+Temporary Items
+.apdisk
+
+### Python ###
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.coverage
+.coverage.*
+.cache
+.pytest_cache/
+nosetests.xml
+coverage.xml
+*.cover
+.hypothesis/
+
+# Translations
+*.mo
+*.pot
+
+# Flask stuff:
+flask/
+instance/settings.py
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# celery beat schedule file
+celerybeat-schedule.*
+
+# Node
+node_modules
+npm-debug.log
+
+# Docker
+Dockerfile*
+docker-compose*
+.dockerignore
+
+# Git
+.git
+.gitattributes
+.gitignore
+
+# Vscode
+.vscode
+*.code-workspace
+
+# Others
+.lgtm.yml
+.travis.yml

.env (deleted file, 16 lines changed)
@@ -1,16 +0,0 @@
-ENVIRONMENT=development
-
-PDA_DB_HOST=powerdns-admin-mysql
-PDA_DB_NAME=powerdns_admin
-PDA_DB_USER=powerdns_admin
-PDA_DB_PASSWORD=changeme
-PDA_DB_PORT=3306
-
-PDNS_DB_HOST=pdns-mysql
-PDNS_DB_NAME=pdns
-PDNS_DB_USER=pdns
-PDNS_DB_PASSWORD=changeme
-
-PDNS_HOST=pdns-server
-PDNS_API_KEY=changeme
-PDNS_WEBSERVER_ALLOW_FROM=0.0.0.0

.gitignore (17 lines changed)
@@ -25,22 +25,17 @@ nosetests.xml
 
 flask
 config.py
+configs/production.py
 logfile.log
-settings.json
-advanced_settings.json
-idp.crt
 log.txt
-
-db_repository/*
-upload/avatar/*
-tmp/*
-.ropeproject
-.sonarlint/*
 pdns.db
+idp.crt
+*.bak
+db_repository/*
+tmp/*
 
 node_modules
-
-.webassets-cache
 app/static/generated
+.webassets-cache
 .venv*
 .pytest_cache

.yarnrc (2 lines changed)
@@ -1 +1 @@
---*.modules-folder "./app/static/node_modules"
+--*.modules-folder "./powerdnsadmin/static/node_modules"

145

README.md

145

README.md

@@ -38,144 +38,11 @@ You can now access PowerDNS-Admin at url http://localhost:9191

|

|||||||

|

|

||||||

**NOTE:** For other methods to run PowerDNS-Admin, please take look at WIKI pages.

|

**NOTE:** For other methods to run PowerDNS-Admin, please take look at WIKI pages.

|

||||||

|

|

||||||

|

## Build production docker container image

|

||||||

|

|

||||||

|

```

|

||||||

|

$ docker build -t powerdns-admin:latest -f docker/Production/Dockerfile .

|

||||||

|

```

|

||||||

|

|

||||||

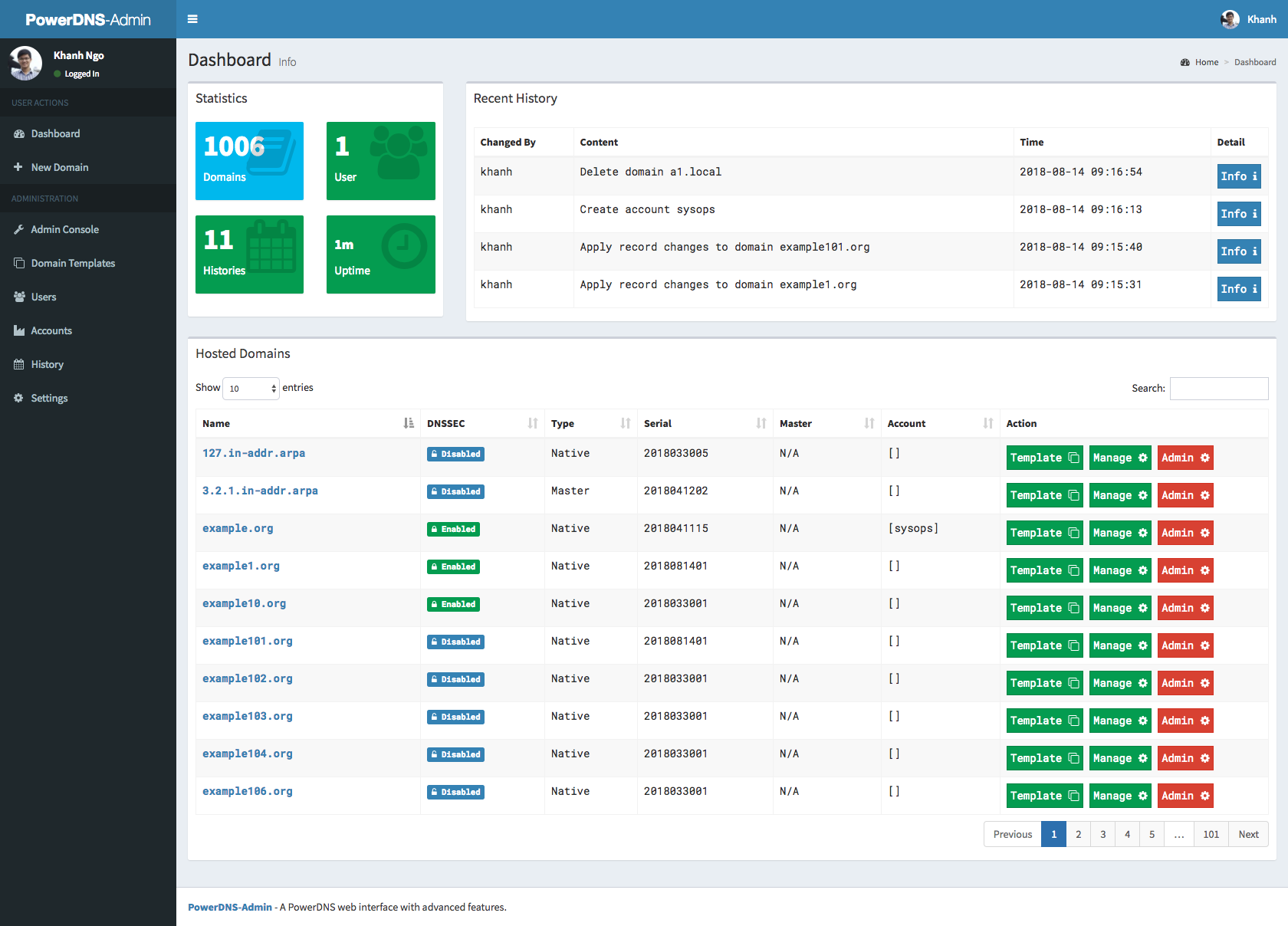

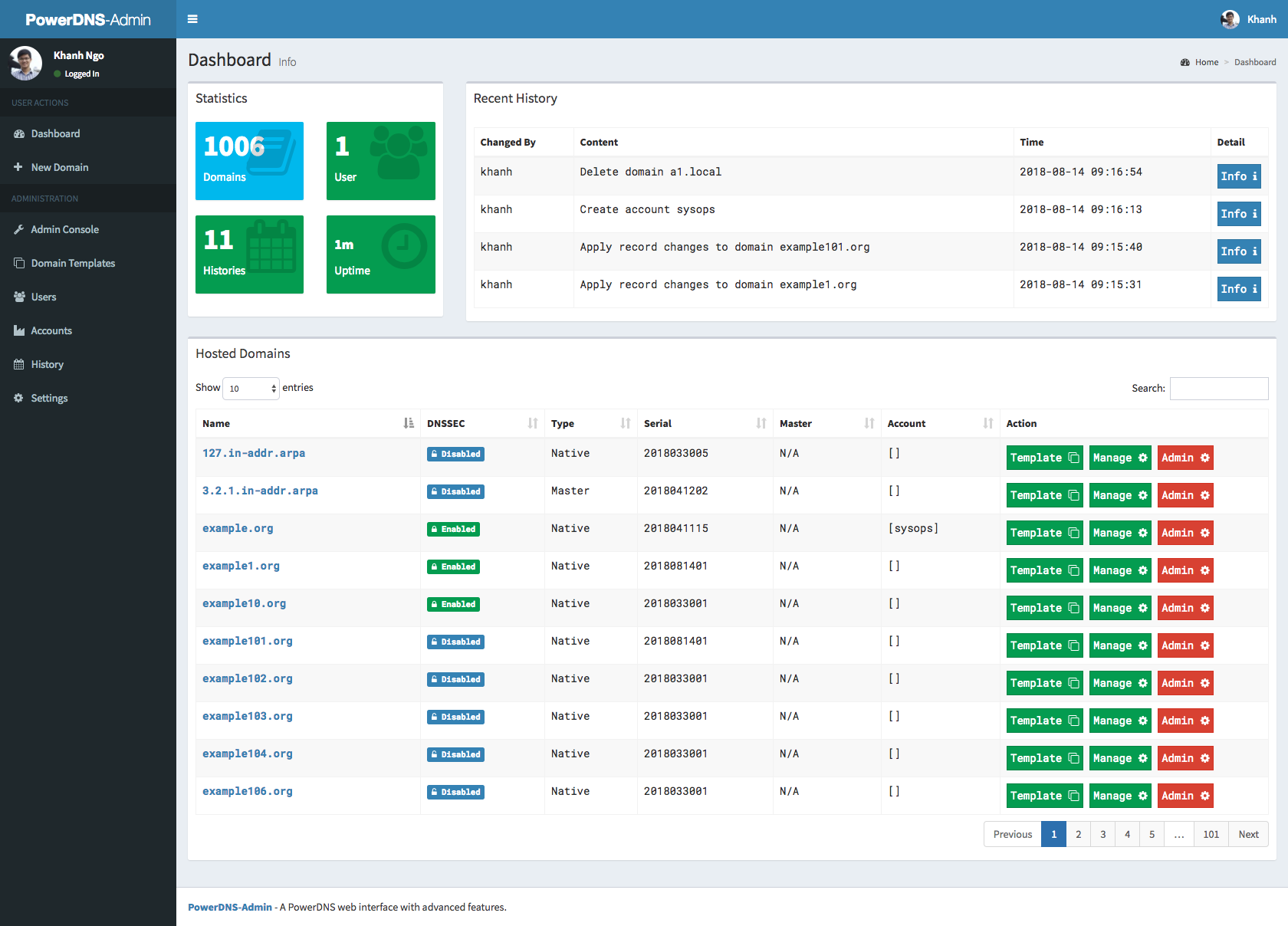

### Screenshots

|

### Screenshots

|

||||||

|

|

||||||

|

|

||||||

### Running tests

|

|

||||||

|

|

||||||

**NOTE:** Tests will create `__pycache__` folders which will be owned by root, which might be issue during rebuild

|

|

||||||

|

|

||||||

thus (e.g. invalid tar headers message) when such situation occurs, you need to remove those folders as root

|

|

||||||

|

|

||||||

1. Build images

|

|

||||||

|

|

||||||

```

|

|

||||||

docker-compose -f docker-compose-test.yml build

|

|

||||||

```

|

|

||||||

|

|

||||||

2. Run tests

|

|

||||||

|

|

||||||

```

|

|

||||||

docker-compose -f docker-compose-test.yml up

|

|

||||||

```

|

|

||||||

|

|

||||||

3. Rerun tests

|

|

||||||

|

|

||||||

```

|

|

||||||

docker-compose -f docker-compose-test.yml down

|

|

||||||

```

|

|

||||||

|

|

||||||

To teardown previous environment

|

|

||||||

|

|

||||||

```

|

|

||||||

docker-compose -f docker-compose-test.yml up

|

|

||||||

```

|

|

||||||

|

|

||||||

To run tests again

|

|

||||||

|

|

||||||

### API Usage

|

|

||||||

|

|

||||||

1. run docker image docker-compose up, go to UI http://localhost:9191, at http://localhost:9191/swagger is swagger API specification

|

|

||||||

2. click to register user, type e.g. user: admin and password: admin

|

|

||||||

3. login to UI in settings enable allow domain creation for users,

|

|

||||||

now you can create and manage domains with admin account and also ordinary users

|

|

||||||

4. Encode your user and password to base64, in our example we have user admin and password admin so in linux cmd line we type:

|

|

||||||

|

|

||||||

```

|

|

||||||

someuser@somehost:~$echo -n 'admin:admin'|base64

|

|

||||||

YWRtaW46YWRtaW4=

|

|

||||||

```

|

|

||||||

|

|

||||||

we use generated output in basic authentication, we authenticate as user,

|

|

||||||

with basic authentication, we can create/delete/get zone and create/delete/get/update apikeys

|

|

||||||

|

|

||||||

creating domain:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -L -vvv -H 'Content-Type: application/json' -H 'Authorization: Basic YWRtaW46YWRtaW4=' -X POST http://localhost:9191/api/v1/pdnsadmin/zones --data '{"name": "yourdomain.com.", "kind": "NATIVE", "nameservers": ["ns1.mydomain.com."]}'

|

|

||||||

```

|

|

||||||

|

|

||||||

creating apikey which has Administrator role, apikey can have also User role, when creating such apikey you have to specify also domain for which apikey is valid:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -L -vvv -H 'Content-Type: application/json' -H 'Authorization: Basic YWRtaW46YWRtaW4=' -X POST http://localhost:9191/api/v1/pdnsadmin/apikeys --data '{"description": "masterkey","domains":[], "role": "Administrator"}'

|

|

||||||

```

|

|

||||||

|

|

||||||

call above will return response like this:

|

|

||||||

|

|

||||||

```

|

|

||||||

[{"description": "samekey", "domains": [], "role": {"name": "Administrator", "id": 1}, "id": 2, "plain_key": "aGCthP3KLAeyjZI"}]

|

|

||||||

```

|

|

||||||

|

|

||||||

we take plain_key and base64 encode it, this is the only time we can get API key in plain text and save it somewhere:

|

|

||||||

|

|

||||||

```

|

|

||||||

someuser@somehost:~$echo -n 'aGCthP3KLAeyjZI'|base64

|

|

||||||

YUdDdGhQM0tMQWV5alpJ

|

|

||||||

```

|

|

||||||

|

|

||||||

We can use apikey for all calls specified in our API specification (it tries to follow powerdns API 1:1, only tsigkeys endpoints are not yet implemented), don't forget to specify Content-Type!

|

|

||||||

|

|

||||||

getting powerdns configuration:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -L -vvv -H 'Content-Type: application/json' -H 'X-API-KEY: YUdDdGhQM0tMQWV5alpJ' -X GET http://localhost:9191/api/v1/servers/localhost/config

|

|

||||||

```

|

|

||||||

|

|

||||||

creating and updating records:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -X PATCH -H 'Content-Type: application/json' --data '{"rrsets": [{"name": "test1.yourdomain.com.","type": "A","ttl": 86400,"changetype": "REPLACE","records": [ {"content": "192.0.2.5", "disabled": false} ]},{"name": "test2.yourdomain.com.","type": "AAAA","ttl": 86400,"changetype": "REPLACE","records": [ {"content": "2001:db8::6", "disabled": false} ]}]}' -H 'X-API-Key: YUdDdGhQM0tMQWV5alpJ' http://127.0.0.1:9191/api/v1/servers/localhost/zones/yourdomain.com.

|

|

||||||

```

|

|

||||||

|

|

||||||

getting domain:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -L -vvv -H 'Content-Type: application/json' -H 'X-API-KEY: YUdDdGhQM0tMQWV5alpJ' -X GET http://localhost:9191/api/v1/servers/localhost/zones/yourdomain.com

|

|

||||||

```

|

|

||||||

|

|

||||||

list zone records:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -H 'Content-Type: application/json' -H 'X-API-Key: YUdDdGhQM0tMQWV5alpJ' http://localhost:9191/api/v1/servers/localhost/zones/yourdomain.com

|

|

||||||

```

|

|

||||||

|

|

||||||

add new record:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -H 'Content-Type: application/json' -X PATCH --data '{"rrsets": [ {"name": "test.yourdomain.com.", "type": "A", "ttl": 86400, "changetype": "REPLACE", "records": [ {"content": "192.0.5.4", "disabled": false } ] } ] }' -H 'X-API-Key: YUdDdGhQM0tMQWV5alpJ' http://localhost:9191/api/v1/servers/localhost/zones/yourdomain.com | jq .

|

|

||||||

```

|

|

||||||

|

|

||||||

update record:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -H 'Content-Type: application/json' -X PATCH --data '{"rrsets": [ {"name": "test.yourdomain.com.", "type": "A", "ttl": 86400, "changetype": "REPLACE", "records": [ {"content": "192.0.2.5", "disabled": false, "name": "test.yourdomain.com.", "ttl": 86400, "type": "A"}]}]}' -H 'X-API-Key: YUdDdGhQM0tMQWV5alpJ' http://localhost:9191/api/v1/servers/localhost/zones/yourdomain.com | jq .

|

|

||||||

```

|

|

||||||

|

|

||||||

delete record:

|

|

||||||

|

|

||||||

```

|

|

||||||

curl -H 'Content-Type: application/json' -X PATCH --data '{"rrsets": [ {"name": "test.yourdomain.com.", "type": "A", "ttl": 86400, "changetype": "DELETE"}]}' -H 'X-API-Key: YUdDdGhQM0tMQWV5alpJ' http://localhost:9191/api/v1/servers/localhost/zones/yourdomain.com | jq

|

|

||||||

```

|

|

||||||

|

|

||||||

### Generate ER diagram

|

|

||||||

|

|

||||||

```

|

|

||||||

apt-get install python-dev graphviz libgraphviz-dev pkg-config

|

|

||||||

```

|

|

||||||

|

|

||||||

```

|

|

||||||

pip install graphviz mysqlclient ERAlchemy

|

|

||||||

```

|

|

||||||

|

|

||||||

```

|

|

||||||

docker-compose up -d

|

|

||||||

```

|

|

||||||

|

|

||||||

```

|

|

||||||

source .env

|

|

||||||

```

|

|

||||||

|

|

||||||

```

|

|

||||||

eralchemy -i 'mysql://${PDA_DB_USER}:${PDA_DB_PASSWORD}@'$(docker inspect powerdns-admin-mysql|jq -jr '.[0].NetworkSettings.Networks.powerdnsadmin_default.IPAddress')':3306/powerdns_admin' -o /tmp/output.pdf

|

|

||||||

```

|

|

||||||
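This commit removes the API usage walkthrough from README.md. That walkthrough builds two authentication headers by hand. The first is an HTTP Basic credential, where `admin:admin` becomes `YWRtaW46YWRtaW4=`. The second is an `X-API-KEY` value, which is the base64 encoding of the `plain_key` returned when the key is created. The minimal Python sketch below reproduces both steps with the standard library. The helper names are hypothetical, and it assumes the creation response has the shape of the sample quoted in that README text.

```python
import base64
import json


def basic_auth_header(username: str, password: str) -> str:
    """Return an HTTP Basic Authorization header value for username:password."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def api_key_header_value(create_response: str) -> str:
    """Base64-encode the plain_key from an API-key creation response.

    Assumes a JSON list whose first element carries "plain_key",
    like the sample response in the removed README text.
    """
    plain_key = json.loads(create_response)[0]["plain_key"]
    return base64.b64encode(plain_key.encode("utf-8")).decode("ascii")


sample = ('[{"description": "samekey", "domains": [], '
          '"role": {"name": "Administrator", "id": 1}, '
          '"id": 2, "plain_key": "aGCthP3KLAeyjZI"}]')

print(basic_auth_header("admin", "admin"))  # Basic YWRtaW46YWRtaW4=
print(api_key_header_value(sample))         # YUdDdGhQM0tMQWV5alpJ
```

The shell version uses `echo -n` for the same reason this sketch encodes the exact string. A trailing newline would change the encoded value.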

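In the API usage section removed from README.md, every record example sends a PATCH containing an `rrsets` list to `/api/v1/servers/localhost/zones/<zone>`. In those examples, `changetype` is `REPLACE` to create or overwrite an RRset and `DELETE` to remove it. The sketch below builds such requests with Python's `urllib.request` but does not send them. `rrset_patch_request` is a hypothetical helper. The base URL, zone name and key are the placeholder values from the README.

```python
import json
import urllib.request


def rrset_patch_request(base_url, zone, api_key, rrsets):
    """Build (but do not send) a PATCH request that applies rrset changes to one zone."""
    url = f"{base_url}/api/v1/servers/localhost/zones/{zone}"
    body = json.dumps({"rrsets": rrsets}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
    )


# REPLACE: the RRset named test.yourdomain.com./A ends up holding exactly these records.
add_a = {
    "name": "test.yourdomain.com.",
    "type": "A",
    "ttl": 86400,
    "changetype": "REPLACE",
    "records": [{"content": "192.0.2.5", "disabled": False}],
}
# DELETE: removes the whole RRset identified by name and type.
delete_a = {"name": "test.yourdomain.com.", "type": "A", "changetype": "DELETE"}

key = "YUdDdGhQM0tMQWV5alpJ"
req = rrset_patch_request("http://localhost:9191", "yourdomain.com.", key, [add_a])
delete_req = rrset_patch_request("http://localhost:9191", "yourdomain.com.", key, [delete_a])
print(req.get_method(), req.full_url)
# Sending would be: urllib.request.urlopen(req)
```

Building the body with `json.dumps` also avoids the quoting mistakes that are easy to make in the long single-quoted `--data` strings of the curl examples.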

@@ -1,133 +0,0 @@
-import os
-basedir = os.path.abspath(os.path.dirname(__file__))
-
-# BASIC APP CONFIG
-SECRET_KEY = 'We are the world'
-BIND_ADDRESS = '127.0.0.1'
-PORT = 9191
-
-# TIMEOUT - for large zones
-TIMEOUT = 10
-
-# LOG CONFIG
-# - For docker, LOG_FILE=''
-LOG_LEVEL = 'DEBUG'
-LOG_FILE = 'logfile.log'
-SALT = '$2b$12$yLUMTIfl21FKJQpTkRQXCu'
-
-# UPLOAD DIRECTORY
-UPLOAD_DIR = os.path.join(basedir, 'upload')
-
-# DATABASE CONFIG
-SQLA_DB_USER = 'pda'
-SQLA_DB_PASSWORD = 'changeme'
-SQLA_DB_HOST = '127.0.0.1'
-SQLA_DB_PORT = 3306
-SQLA_DB_NAME = 'pda'
-SQLALCHEMY_TRACK_MODIFICATIONS = True
-
-# DATABASE - MySQL
-SQLALCHEMY_DATABASE_URI = 'mysql://'+SQLA_DB_USER+':'+SQLA_DB_PASSWORD+'@'+SQLA_DB_HOST+':'+str(SQLA_DB_PORT)+'/'+SQLA_DB_NAME
-
-# DATABASE - SQLite
-# SQLALCHEMY_DATABASE_URI = 'sqlite:///' + os.path.join(basedir, 'pdns.db')
-
-# SAML Authentication
-SAML_ENABLED = False
-SAML_DEBUG = True
-SAML_PATH = os.path.join(os.path.dirname(__file__), 'saml')
-##Example for ADFS Metadata-URL
-SAML_METADATA_URL = 'https://<hostname>/FederationMetadata/2007-06/FederationMetadata.xml'
-#Cache Lifetime in Seconds
-SAML_METADATA_CACHE_LIFETIME = 1
-
-# SAML SSO binding format to use
-## Default: library default (urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect)
-#SAML_IDP_SSO_BINDING = 'urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST'
-
-## EntityID of the IdP to use. Only needed if more than one IdP is
-## in the SAML_METADATA_URL
-### Default: First (only) IdP in the SAML_METADATA_URL
-### Example: https://idp.example.edu/idp
-#SAML_IDP_ENTITY_ID = 'https://idp.example.edu/idp'
-## NameID format to request
-### Default: The SAML NameID Format in the metadata if present,
-### otherwise urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_NAMEID_FORMAT = 'urn:oid:0.9.2342.19200300.100.1.1'
-
-## Attribute to use for Email address
-### Default: email
-### Example: urn:oid:0.9.2342.19200300.100.1.3
-#SAML_ATTRIBUTE_EMAIL = 'urn:oid:0.9.2342.19200300.100.1.3'
-
-## Attribute to use for Given name
-### Default: givenname
-### Example: urn:oid:2.5.4.42
-#SAML_ATTRIBUTE_GIVENNAME = 'urn:oid:2.5.4.42'
-
-## Attribute to use for Surname
-### Default: surname
-### Example: urn:oid:2.5.4.4
-#SAML_ATTRIBUTE_SURNAME = 'urn:oid:2.5.4.4'
-
-## Split into Given name and Surname
-## Useful if your IDP only gives a display name
-### Default: none
-### Example: http://schemas.microsoft.com/identity/claims/displayname
-#SAML_ATTRIBUTE_NAME = 'http://schemas.microsoft.com/identity/claims/displayname'
-
-## Attribute to use for username
-### Default: Use NameID instead
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_ATTRIBUTE_USERNAME = 'urn:oid:0.9.2342.19200300.100.1.1'
-
-## Attribute to get admin status from
-### Default: Don't control admin with SAML attribute
-### Example: https://example.edu/pdns-admin
-### If set, look for the value 'true' to set a user as an administrator
-### If not included in assertion, or set to something other than 'true',
-### the user is set as a non-administrator user.
-#SAML_ATTRIBUTE_ADMIN = 'https://example.edu/pdns-admin'
-
-## Attribute to get group from
-### Default: Don't use groups from SAML attribute
-### Example: https://example.edu/pdns-admin-group
-#SAML_ATTRIBUTE_GROUP = 'https://example.edu/pdns-admin'
-
-## Group namem to get admin status from
-### Default: Don't control admin with SAML group
-### Example: https://example.edu/pdns-admin
-#SAML_GROUP_ADMIN_NAME = 'powerdns-admin'
-
-## Attribute to get group to account mappings from
-### Default: None
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion if they are in the required group
-#SAML_GROUP_TO_ACCOUNT_MAPPING = 'dev-admins=dev,prod-admins=prod'
-
-## Attribute to get account names from
-### Default: Don't control accounts with SAML attribute
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion. Accounts that don't exist will
-### be created and the user added to them.
-SAML_ATTRIBUTE_ACCOUNT = 'https://example.edu/pdns-account'
-
-SAML_SP_ENTITY_ID = 'http://<SAML SP Entity ID>'
-SAML_SP_CONTACT_NAME = '<contact name>'
-SAML_SP_CONTACT_MAIL = '<contact mail>'
-#Configures if SAML tokens should be encrypted.
-#If enabled a new app certificate will be generated on restart
-SAML_SIGN_REQUEST = False
-
-# Configures if you want to request the IDP to sign the message
-# Default is True
-#SAML_WANT_MESSAGE_SIGNED = True
-
-#Use SAML standard logout mechanism retrieved from idp metadata
-#If configured false don't care about SAML session on logout.
-#Logout from PowerDNS-Admin only and keep SAML session authenticated.
-SAML_LOGOUT = False
-#Configure to redirect to a different url then PowerDNS-Admin login after SAML logout
-#for example redirect to google.com after successful saml logout
-#SAML_LOGOUT_URL = 'https://google.com'
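The deleted configuration template documents `SAML_GROUP_TO_ACCOUNT_MAPPING` only through its example value, `'dev-admins=dev,prod-admins=prod'`. If that example is read as comma-separated `group=account` pairs, a parser might look like the sketch below. Both the function and the pair format are assumptions inferred from the example. They are not taken from PowerDNS-Admin's own code.

```python
def parse_group_account_mapping(value):
    """Parse 'group=account,group2=account2' into a dict (format inferred from the example)."""
    mapping = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue  # tolerate empty items such as a trailing comma
        group, sep, account = pair.partition("=")
        group, account = group.strip(), account.strip()
        if not sep or not group or not account:
            raise ValueError(f"expected group=account, got {pair!r}")
        mapping[group] = account
    return mapping


print(parse_group_account_mapping("dev-admins=dev,prod-admins=prod"))
```

The parser rejects malformed items instead of skipping them. A typo in this setting would otherwise silently remove users from accounts.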

@@ -1,124 +1,94 @@
 import os
-basedir = os.path.abspath(os.path.dirname(__file__))
+basedir = os.path.abspath(os.path.abspath(os.path.dirname(__file__)))
 
-# BASIC APP CONFIG
-SECRET_KEY = 'changeme'
-LOG_LEVEL = 'DEBUG'
-LOG_FILE = os.path.join(basedir, 'logs/log.txt')
+### BASIC APP CONFIG
 SALT = '$2b$12$yLUMTIfl21FKJQpTkRQXCu'
-# TIMEOUT - for large zones
-TIMEOUT = 10
+SECRET_KEY = 'e951e5a1f4b94151b360f47edf596dd2'
+BIND_ADDRESS = '0.0.0.0'
+PORT = 9191
 
-# UPLOAD DIR
-UPLOAD_DIR = os.path.join(basedir, 'upload')
-
-# DATABASE CONFIG FOR MYSQL
-DB_HOST = os.environ.get('PDA_DB_HOST')
-DB_PORT = os.environ.get('PDA_DB_PORT', 3306 )
-DB_NAME = os.environ.get('PDA_DB_NAME')
-DB_USER = os.environ.get('PDA_DB_USER')
-DB_PASSWORD = os.environ.get('PDA_DB_PASSWORD')
-#MySQL
-SQLALCHEMY_DATABASE_URI = 'mysql://'+DB_USER+':'+DB_PASSWORD+'@'+DB_HOST+':'+ str(DB_PORT) + '/'+DB_NAME
-SQLALCHEMY_MIGRATE_REPO = os.path.join(basedir, 'db_repository')
+### DATABASE CONFIG
+SQLA_DB_USER = 'pda'
+SQLA_DB_PASSWORD = 'changeme'
+SQLA_DB_HOST = '127.0.0.1'
+SQLA_DB_NAME = 'pda'
 SQLALCHEMY_TRACK_MODIFICATIONS = True
 
-# SAML Authentication
+### DATBASE - MySQL
+# SQLALCHEMY_DATABASE_URI = 'mysql://' + SQLA_DB_USER + ':' + SQLA_DB_PASSWORD + '@' + SQLA_DB_HOST + '/' + SQLA_DB_NAME
+
+### DATABSE - SQLite
+SQLALCHEMY_DATABASE_URI = 'sqlite:///' + os.path.join(basedir, 'pdns.db')
+
+# SAML Authnetication
 SAML_ENABLED = False
-SAML_DEBUG = True
-SAML_PATH = os.path.join(os.path.dirname(__file__), 'saml')
-##Example for ADFS Metadata-URL
-SAML_METADATA_URL = 'https://<hostname>/FederationMetadata/2007-06/FederationMetadata.xml'
-#Cache Lifetime in Seconds
-SAML_METADATA_CACHE_LIFETIME = 1
+# SAML_DEBUG = True
+# SAML_PATH = os.path.join(os.path.dirname(__file__), 'saml')
+# ##Example for ADFS Metadata-URL
+# SAML_METADATA_URL = 'https://<hostname>/FederationMetadata/2007-06/FederationMetadata.xml'
+# #Cache Lifetime in Seconds
+# SAML_METADATA_CACHE_LIFETIME = 1
 
-# SAML SSO binding format to use
-## Default: library default (urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect)
-#SAML_IDP_SSO_BINDING = 'urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST'
+# # SAML SSO binding format to use
+# ## Default: library default (urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect)
+# #SAML_IDP_SSO_BINDING = 'urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST'
 
-## EntityID of the IdP to use. Only needed if more than one IdP is
-## in the SAML_METADATA_URL
-### Default: First (only) IdP in the SAML_METADATA_URL
-### Example: https://idp.example.edu/idp
-#SAML_IDP_ENTITY_ID = 'https://idp.example.edu/idp'
-## NameID format to request
-### Default: The SAML NameID Format in the metadata if present,
-### otherwise urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_NAMEID_FORMAT = 'urn:oid:0.9.2342.19200300.100.1.1'
+# ## EntityID of the IdP to use. Only needed if more than one IdP is
+# ## in the SAML_METADATA_URL
+# ### Default: First (only) IdP in the SAML_METADATA_URL
+# ### Example: https://idp.example.edu/idp
+# #SAML_IDP_ENTITY_ID = 'https://idp.example.edu/idp'
+# ## NameID format to request
+# ### Default: The SAML NameID Format in the metadata if present,
+# ### otherwise urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified
+# ### Example: urn:oid:0.9.2342.19200300.100.1.1
+# #SAML_NAMEID_FORMAT = 'urn:oid:0.9.2342.19200300.100.1.1'
 
-## Attribute to use for Email address
-### Default: email
-### Example: urn:oid:0.9.2342.19200300.100.1.3
-#SAML_ATTRIBUTE_EMAIL = 'urn:oid:0.9.2342.19200300.100.1.3'
+# ## Attribute to use for Email address
+# ### Default: email
+# ### Example: urn:oid:0.9.2342.19200300.100.1.3
+# #SAML_ATTRIBUTE_EMAIL = 'urn:oid:0.9.2342.19200300.100.1.3'
 
-## Attribute to use for Given name
-### Default: givenname
-### Example: urn:oid:2.5.4.42
-#SAML_ATTRIBUTE_GIVENNAME = 'urn:oid:2.5.4.42'
+# ## Attribute to use for Given name
+# ### Default: givenname
+# ### Example: urn:oid:2.5.4.42
+# #SAML_ATTRIBUTE_GIVENNAME = 'urn:oid:2.5.4.42'
 
-## Attribute to use for Surname
-### Default: surname
-### Example: urn:oid:2.5.4.4
-#SAML_ATTRIBUTE_SURNAME = 'urn:oid:2.5.4.4'
+# ## Attribute to use for Surname
+# ### Default: surname
+# ### Example: urn:oid:2.5.4.4
+# #SAML_ATTRIBUTE_SURNAME = 'urn:oid:2.5.4.4'
 
-## Split into Given name and Surname
-## Useful if your IDP only gives a display name
-### Default: none
-### Example: http://schemas.microsoft.com/identity/claims/displayname
-#SAML_ATTRIBUTE_NAME = 'http://schemas.microsoft.com/identity/claims/displayname'
+# ## Attribute to use for username
+# ### Default: Use NameID instead
+# ### Example: urn:oid:0.9.2342.19200300.100.1.1
+# #SAML_ATTRIBUTE_USERNAME = 'urn:oid:0.9.2342.19200300.100.1.1'
 
-## Attribute to use for username
-### Default: Use NameID instead
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_ATTRIBUTE_USERNAME = 'urn:oid:0.9.2342.19200300.100.1.1'
+# ## Attribute to get admin status from
+# ### Default: Don't control admin with SAML attribute
+# ### Example: https://example.edu/pdns-admin
+# ### If set, look for the value 'true' to set a user as an administrator
+# ### If not included in assertion, or set to something other than 'true',
+# ### the user is set as a non-administrator user.
+# #SAML_ATTRIBUTE_ADMIN = 'https://example.edu/pdns-admin'
 
-## Attribute to get admin status from
-### Default: Don't control admin with SAML attribute
-### Example: https://example.edu/pdns-admin
-### If set, look for the value 'true' to set a user as an administrator
-### If not included in assertion, or set to something other than 'true',
-### the user is set as a non-administrator user.
-#SAML_ATTRIBUTE_ADMIN = 'https://example.edu/pdns-admin'
+# ## Attribute to get account names from
+# ### Default: Don't control accounts with SAML attribute
+# ### If set, the user will be added and removed from accounts to match
+# ### what's in the login assertion. Accounts that don't exist will
+# ### be created and the user added to them.
+# SAML_ATTRIBUTE_ACCOUNT = 'https://example.edu/pdns-account'
 
-## Attribute to get group from
-### Default: Don't use groups from SAML attribute
-### Example: https://example.edu/pdns-admin-group
-#SAML_ATTRIBUTE_GROUP = 'https://example.edu/pdns-admin'
-
-## Group namem to get admin status from
-### Default: Don't control admin with SAML group
-### Example: https://example.edu/pdns-admin
-#SAML_GROUP_ADMIN_NAME = 'powerdns-admin'
-
-## Attribute to get group to account mappings from
-### Default: None
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion if they are in the required group
-#SAML_GROUP_TO_ACCOUNT_MAPPING = 'dev-admins=dev,prod-admins=prod'
-
-## Attribute to get account names from
-### Default: Don't control accounts with SAML attribute
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion. Accounts that don't exist will
-### be created and the user added to them.
-SAML_ATTRIBUTE_ACCOUNT = 'https://example.edu/pdns-account'
-
-SAML_SP_ENTITY_ID = 'http://<SAML SP Entity ID>'
-SAML_SP_CONTACT_NAME = '<contact name>'
-SAML_SP_CONTACT_MAIL = '<contact mail>'
-#Configures if SAML tokens should be encrypted.
-#If enabled a new app certificate will be generated on restart
-SAML_SIGN_REQUEST = False
-
-# Configures if you want to request the IDP to sign the message
-# Default is True
-#SAML_WANT_MESSAGE_SIGNED = True
-
-#Use SAML standard logout mechanism retrieved from idp metadata
-#If configured false don't care about SAML session on logout.
-#Logout from PowerDNS-Admin only and keep SAML session authenticated.
-SAML_LOGOUT = False
-#Configure to redirect to a different url then PowerDNS-Admin login after SAML logout
-#for example redirect to google.com after successful saml logout
-#SAML_LOGOUT_URL = 'https://google.com'
+# SAML_SP_ENTITY_ID = 'http://<SAML SP Entity ID>'
+# SAML_SP_CONTACT_NAME = '<contact name>'
+# SAML_SP_CONTACT_MAIL = '<contact mail>'
+# #Cofigures if SAML tokens should be encrypted.
+# #If enabled a new app certificate will be generated on restart
+# SAML_SIGN_REQUEST = False
+# #Use SAML standard logout mechanism retreived from idp metadata
+# #If configured false don't care about SAML session on logout.
+# #Logout from PowerDNS-Admin only and keep SAML session authenticated.
+# SAML_LOGOUT = False
+# #Configure to redirect to a different url then PowerDNS-Admin login after SAML logout
+# #for example redirect to google.com after successful saml logout
+# #SAML_LOGOUT_URL = 'https://google.com'
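Before this commit, the modified config built `SQLALCHEMY_DATABASE_URI` by concatenating `DB_*` values read with `os.environ.get('PDA_DB_*')`. That approach has two weaknesses. An unset variable yields `None`, so the concatenation fails with `TypeError`. A password containing `@` or `:` also corrupts the URI. The sketch below is a more defensive builder. It assumes the variable names from the deleted `.env` file. The function name, the error message and the sample password are illustrative and are not PowerDNS-Admin code.

```python
import os
from urllib.parse import quote_plus


def mysql_uri_from_env(environ=os.environ):
    """Build a SQLAlchemy MySQL URI from PDA_DB_* variables, failing clearly if any are missing."""
    required = ("PDA_DB_USER", "PDA_DB_PASSWORD", "PDA_DB_HOST", "PDA_DB_NAME")
    # Treat unset and empty values alike as missing.
    missing = [name for name in required if not environ.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    port = int(environ.get("PDA_DB_PORT", "3306"))
    # quote_plus escapes characters such as '@', ':' and '/' inside the credentials.
    user = quote_plus(environ["PDA_DB_USER"])
    password = quote_plus(environ["PDA_DB_PASSWORD"])
    return f"mysql://{user}:{password}@{environ['PDA_DB_HOST']}:{port}/{environ['PDA_DB_NAME']}"


example_env = {
    "PDA_DB_HOST": "powerdns-admin-mysql",
    "PDA_DB_NAME": "powerdns_admin",
    "PDA_DB_USER": "powerdns_admin",
    "PDA_DB_PASSWORD": "change@me",  # illustrative password containing '@'
    "PDA_DB_PORT": "3306",
}
print(mysql_uri_from_env(example_env))
# mysql://powerdns_admin:change%40me@powerdns-admin-mysql:3306/powerdns_admin
```

The removed README's `eralchemy` command has a related pitfall. There, `${PDA_DB_USER}` and `${PDA_DB_PASSWORD}` sit inside single quotes, so the shell passes them through literally instead of expanding them.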

@@ -1,4 +1,4 @@
-# defaults for Docker image
+# Defaults for Docker image
 BIND_ADDRESS='0.0.0.0'
 PORT=80
 
@@ -6,11 +6,8 @@ legal_envvars = (
 'SECRET_KEY',
 'BIND_ADDRESS',
 'PORT',
-'TIMEOUT',
 'LOG_LEVEL',
-'LOG_FILE',
 'SALT',
-'UPLOAD_DIR',
 'SQLALCHEMY_TRACK_MODIFICATIONS',
 'SQLALCHEMY_DATABASE_URI',
 'SAML_ENABLED',
@@ -42,12 +39,12 @@ legal_envvars = (
 
 legal_envvars_int = (
 'PORT',
-'TIMEOUT',
 'SAML_METADATA_CACHE_LIFETIME',
 )
 
 legal_envvars_bool = (
 'SQLALCHEMY_TRACK_MODIFICATIONS',
+'HSTS_ENABLED',
 'SAML_ENABLED',
 'SAML_DEBUG',
 'SAML_SIGN_REQUEST',
@@ -66,5 +63,3 @@ for v in legal_envvars:
 if v in legal_envvars_int:
 ret = int(ret)
 sys.modules[__name__].__dict__[v] = ret
-
-
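The Docker config hunks change which names appear in `legal_envvars`, `legal_envvars_int` and `legal_envvars_bool`. A loop at the end of that file copies each allowed environment variable into the module namespace and converts integer-typed names with `int()`. The sketch below applies the same allow-list idea but returns a dict instead of writing to `sys.modules`. The lists are abbreviated and modeled on the names visible in this diff. The boolean spellings it accepts are an assumption, because the bool branch of the real loop is not shown here.

```python
import os

# Abbreviated allow-lists modeled on names visible in this diff (illustrative).
LEGAL_ENVVARS = ("SECRET_KEY", "BIND_ADDRESS", "PORT", "LOG_LEVEL", "SALT",
                 "SQLALCHEMY_TRACK_MODIFICATIONS", "SQLALCHEMY_DATABASE_URI",
                 "SAML_ENABLED", "SAML_METADATA_CACHE_LIFETIME")
LEGAL_ENVVARS_INT = ("PORT", "SAML_METADATA_CACHE_LIFETIME")
LEGAL_ENVVARS_BOOL = ("SQLALCHEMY_TRACK_MODIFICATIONS", "SAML_ENABLED")

TRUE_VALUES = {"1", "true", "yes", "on"}    # assumed truthy spellings
FALSE_VALUES = {"0", "false", "no", "off"}  # assumed falsy spellings


def overrides_from_env(environ=os.environ):
    """Return typed config overrides for allow-listed variables present in environ."""
    result = {}
    for name in LEGAL_ENVVARS:
        if name not in environ:
            continue  # names outside the environment keep their file defaults
        value = environ[name]
        if name in LEGAL_ENVVARS_BOOL:
            lowered = value.strip().lower()
            if lowered not in TRUE_VALUES | FALSE_VALUES:
                raise ValueError(f"{name}: not a boolean: {value!r}")
            value = lowered in TRUE_VALUES
        elif name in LEGAL_ENVVARS_INT:
            value = int(value)
        result[name] = value
    return result


print(overrides_from_env({"PORT": "80", "SAML_ENABLED": "false", "UNLISTED": "x"}))
# {'PORT': 80, 'SAML_ENABLED': False}
```

Parsing booleans explicitly avoids a common pitfall: `bool("false")` is `True` because any non-empty string is truthy.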

131
configs/test.py
@@ -1,131 +0,0 @@
-import os
-basedir = os.path.abspath(os.path.dirname(__file__))
-
-# BASIC APP CONFIG
-SECRET_KEY = 'changeme'
-LOG_LEVEL = 'DEBUG'
-LOG_FILE = os.path.join(basedir, 'logs/log.txt')
-SALT = '$2b$12$yLUMTIfl21FKJQpTkRQXCu'
-# TIMEOUT - for large zones
-TIMEOUT = 10
-
-# UPLOAD DIR
-UPLOAD_DIR = os.path.join(basedir, 'upload')
-TEST_USER_PASSWORD = 'test'
-TEST_USER = 'test'
-TEST_ADMIN_USER = 'admin'
-TEST_ADMIN_PASSWORD = 'admin'
-TEST_USER_APIKEY = 'wewdsfewrfsfsdf'
-TEST_ADMIN_APIKEY = 'nghnbnhtghrtert'
-# DATABASE CONFIG FOR MYSQL
-# DB_HOST = os.environ.get('PDA_DB_HOST')
-# DB_PORT = os.environ.get('PDA_DB_PORT', 3306 )
-# DB_NAME = os.environ.get('PDA_DB_NAME')
-# DB_USER = os.environ.get('PDA_DB_USER')
-# DB_PASSWORD = os.environ.get('PDA_DB_PASSWORD')
-# #MySQL
-# SQLALCHEMY_DATABASE_URI = 'mysql://'+DB_USER+':'+DB_PASSWORD+'@'+DB_HOST+':'+ str(DB_PORT) + '/'+DB_NAME
-# SQLALCHEMY_MIGRATE_REPO = os.path.join(basedir, 'db_repository')
-TEST_DB_LOCATION = '/tmp/testing.sqlite'
-SQLALCHEMY_DATABASE_URI = 'sqlite:///{0}'.format(TEST_DB_LOCATION)
-SQLALCHEMY_TRACK_MODIFICATIONS = False
-
-# SAML Authentication
-SAML_ENABLED = False
-SAML_DEBUG = True
-SAML_PATH = os.path.join(os.path.dirname(__file__), 'saml')
-##Example for ADFS Metadata-URL
-SAML_METADATA_URL = 'https://<hostname>/FederationMetadata/2007-06/FederationMetadata.xml'
-#Cache Lifetime in Seconds
-SAML_METADATA_CACHE_LIFETIME = 1
-
-# SAML SSO binding format to use
-## Default: library default (urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect)
-#SAML_IDP_SSO_BINDING = 'urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST'
-
-## EntityID of the IdP to use. Only needed if more than one IdP is
-## in the SAML_METADATA_URL
-### Default: First (only) IdP in the SAML_METADATA_URL
-### Example: https://idp.example.edu/idp
-#SAML_IDP_ENTITY_ID = 'https://idp.example.edu/idp'
-## NameID format to request
-### Default: The SAML NameID Format in the metadata if present,
-### otherwise urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_NAMEID_FORMAT = 'urn:oid:0.9.2342.19200300.100.1.1'
-
-## Attribute to use for Email address
-### Default: email
-### Example: urn:oid:0.9.2342.19200300.100.1.3
-#SAML_ATTRIBUTE_EMAIL = 'urn:oid:0.9.2342.19200300.100.1.3'
-
-## Attribute to use for Given name
-### Default: givenname
-### Example: urn:oid:2.5.4.42
-#SAML_ATTRIBUTE_GIVENNAME = 'urn:oid:2.5.4.42'
-
-## Attribute to use for Surname
-### Default: surname
-### Example: urn:oid:2.5.4.4
-#SAML_ATTRIBUTE_SURNAME = 'urn:oid:2.5.4.4'
-
-## Split into Given name and Surname
-## Useful if your IDP only gives a display name
-### Default: none
-### Example: http://schemas.microsoft.com/identity/claims/displayname
-#SAML_ATTRIBUTE_NAME = 'http://schemas.microsoft.com/identity/claims/displayname'
-
-## Attribute to use for username
-### Default: Use NameID instead
-### Example: urn:oid:0.9.2342.19200300.100.1.1
-#SAML_ATTRIBUTE_USERNAME = 'urn:oid:0.9.2342.19200300.100.1.1'
-
-## Attribute to get admin status from
-### Default: Don't control admin with SAML attribute
-### Example: https://example.edu/pdns-admin
-### If set, look for the value 'true' to set a user as an administrator
-### If not included in assertion, or set to something other than 'true',
-### the user is set as a non-administrator user.
-#SAML_ATTRIBUTE_ADMIN = 'https://example.edu/pdns-admin'
-
-## Attribute to get group from
-### Default: Don't use groups from SAML attribute
-### Example: https://example.edu/pdns-admin-group
-#SAML_ATTRIBUTE_GROUP = 'https://example.edu/pdns-admin'
-
-## Group namem to get admin status from
-### Default: Don't control admin with SAML group
-### Example: https://example.edu/pdns-admin
-#SAML_GROUP_ADMIN_NAME = 'powerdns-admin'
-
-## Attribute to get group to account mappings from
-### Default: None
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion if they are in the required group
-#SAML_GROUP_TO_ACCOUNT_MAPPING = 'dev-admins=dev,prod-admins=prod'
-
-## Attribute to get account names from
-### Default: Don't control accounts with SAML attribute
-### If set, the user will be added and removed from accounts to match
-### what's in the login assertion. Accounts that don't exist will
-### be created and the user added to them.
-SAML_ATTRIBUTE_ACCOUNT = 'https://example.edu/pdns-account'
-
-SAML_SP_ENTITY_ID = 'http://<SAML SP Entity ID>'
-SAML_SP_CONTACT_NAME = '<contact name>'
-SAML_SP_CONTACT_MAIL = '<contact mail>'
-#Configures if SAML tokens should be encrypted.
-#If enabled a new app certificate will be generated on restart
-SAML_SIGN_REQUEST = False
-
-# Configures if you want to request the IDP to sign the message
-# Default is True
-#SAML_WANT_MESSAGE_SIGNED = True
-
-#Use SAML standard logout mechanism retrieved from idp metadata
-#If configured false don't care about SAML session on logout.
-#Logout from PowerDNS-Admin only and keep SAML session authenticated.
-SAML_LOGOUT = False
-#Configure to redirect to a different url then PowerDNS-Admin login after SAML logout
-#for example redirect to google.com after successful saml logout
-#SAML_LOGOUT_URL = 'https://google.com'
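The deleted test config describes `SAML_GROUP_TO_ACCOUNT_MAPPING` as a comma-separated list of `group=account` pairs, for example `'dev-admins=dev,prod-admins=prod'`. The code that consumes this setting is not part of this commit. The sketch below is therefore hypothetical: it shows one way such a string could be parsed and matched against the groups in an assertion. Both helper names are illustrative. It assumes a user's target accounts are exactly those whose group appears in the assertion.

```python
from typing import Dict, Iterable, Set


def parse_group_mapping(value: str) -> Dict[str, str]:
    """Parse 'group=account,group=account' into {group: account}.

    Hypothetical helper: whitespace around items is ignored and
    malformed items (no '=', or an empty side) are skipped.
    """
    mapping: Dict[str, str] = {}
    for item in value.split(','):
        group, sep, account = item.strip().partition('=')
        if sep and group.strip() and account.strip():
            mapping[group.strip()] = account.strip()
    return mapping


def accounts_for_groups(mapping: Dict[str, str], groups: Iterable[str]) -> Set[str]:
    """Accounts the user should belong to, given the groups in the assertion."""
    return {mapping[g] for g in groups if g in mapping}
```

With the documented example value, a user whose assertion carries the groups `dev-admins` and `staff` would map to the single account `dev`.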

@@ -4,12 +4,12 @@ services:
 powerdns-admin:
 build:
 context: .
-dockerfile: docker/PowerDNS-Admin/Dockerfile.test
+dockerfile: docker-test/Dockerfile
 args:
 - ENVIRONMENT=test
 image: powerdns-admin-test
 env_file:
-- ./env-test
+- ./docker-test/env
 container_name: powerdns-admin-test
 mem_limit: 256M
 memswap_limit: 256M
@@ -33,7 +33,7 @@ services:
 pdns-server:
 build:
 context: .
-dockerfile: docker/PowerDNS-Admin/Dockerfile.pdns.test
+dockerfile: docker-test/Dockerfile.pdns
 image: pdns-server-test
 ports:
 - "5053:53"
@@ -41,7 +41,7 @@ services:
 networks:
 - default
 env_file:
-- ./env-test
+- ./docker-test/env
 
 networks:
 default:

@@ -1,114 +1,20 @@
-version: "2.1"
+version: "3"
 
 services:
-powerdns-admin:
+app:
 build:
 context: .
-dockerfile: docker/PowerDNS-Admin/Dockerfile
-args:
-- ENVIRONMENT=${ENVIRONMENT}
-image: powerdns-admin
-container_name: powerdns-admin
-mem_limit: 256M
-memswap_limit: 256M
+dockerfile: docker/Dockerfile
+image: powerdns-admin:latest
+container_name: powerdns_admin
 ports:
-- "9191:9191"
-volumes:
-# Code
-- .:/powerdns-admin/
-- "./configs/${ENVIRONMENT}.py:/powerdns-admin/config.py"
-# Assets dir volume
-- powerdns-admin-assets:/powerdns-admin/app/static
-- powerdns-admin-assets2:/powerdns-admin/node_modules
-- powerdns-admin-assets3:/powerdns-admin/logs
-- ./app/static/custom:/powerdns-admin/app/static/custom
+- "9191:80"
 logging:
 driver: json-file
 options:
 max-size: 50m
-networks:
-- default
 environment:
-- ENVIRONMENT=${ENVIRONMENT}
-- PDA_DB_HOST=${PDA_DB_HOST}
-- PDA_DB_NAME=${PDA_DB_NAME}
-- PDA_DB_USER=${PDA_DB_USER}
-- PDA_DB_PASSWORD=${PDA_DB_PASSWORD}
-- PDA_DB_PORT=${PDA_DB_PORT}
-- PDNS_HOST=${PDNS_HOST}
-- PDNS_API_KEY=${PDNS_API_KEY}
-- FLASK_APP=/powerdns-admin/app/__init__.py
-depends_on:
-powerdns-admin-mysql:
-condition: service_healthy
-
-powerdns-admin-mysql:
-image: mysql/mysql-server:5.7
-hostname: ${PDA_DB_HOST}
-container_name: powerdns-admin-mysql
-mem_limit: 256M
-memswap_limit: 256M
-expose:
-- 3306
-volumes:
-- powerdns-admin-mysql-data:/var/lib/mysql
-networks:
-- default
-environment:
-- MYSQL_DATABASE=${PDA_DB_NAME}
-- MYSQL_USER=${PDA_DB_USER}
-- MYSQL_PASSWORD=${PDA_DB_PASSWORD}
-healthcheck:
-test: ["CMD", "mysqladmin" ,"ping", "-h", "localhost"]
-timeout: 10s
-retries: 5
-
-pdns-server:
-image: psitrax/powerdns
-hostname: ${PDNS_HOST}
-ports:
-- "5053:53"
-- "5053:53/udp"
-networks:
-- default
-command: --api=yes --api-key=${PDNS_API_KEY} --webserver-address=0.0.0.0 --webserver-allow-from=0.0.0.0/0
-environment:
-- MYSQL_HOST=${PDNS_DB_HOST}
-- MYSQL_USER=${PDNS_DB_USER}
-- MYSQL_PASS=${PDNS_DB_PASSWORD}
-- PDNS_API_KEY=${PDNS_API_KEY}
-- PDNS_WEBSERVER_ALLOW_FROM=${PDNS_WEBSERVER_ALLOW_FROM}
-depends_on:
-pdns-mysql:
-condition: service_healthy
-
-pdns-mysql:
-image: mysql/mysql-server:5.7
-hostname: ${PDNS_DB_HOST}
-container_name: ${PDNS_DB_HOST}
-mem_limit: 256M
-memswap_limit: 256M
-expose:
-- 3306
-volumes:
-- powerdns-mysql-data:/var/lib/mysql
-networks:
-- default
-environment:
-- MYSQL_DATABASE=${PDNS_DB_NAME}
-- MYSQL_USER=${PDNS_DB_USER}
-- MYSQL_PASSWORD=${PDNS_DB_PASSWORD}
-healthcheck:
-test: ["CMD", "mysqladmin" ,"ping", "-h", "localhost"]
-timeout: 10s
-retries: 5
-
-networks:
-default:
-
-volumes:
-powerdns-mysql-data:
-powerdns-admin-mysql-data:
-powerdns-admin-assets:
-powerdns-admin-assets2:
-powerdns-admin-assets3:
+- SQLALCHEMY_DATABASE_URI=mysql://pda:changeme@host.docker.internal/pda
+- GUINCORN_TIMEOUT=60
+- GUNICORN_WORKERS=2
+- GUNICORN_LOGLEVEL=DEBUG
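The rewritten `docker-compose.yml` drops the separate `PDA_DB_*` variables. It passes a single `SQLALCHEMY_DATABASE_URI` instead (`mysql://pda:changeme@host.docker.internal/pda`). The deleted test config built such a URI by plain string concatenation. That approach produces a broken URI whenever the password contains characters like `@`, `:` or `/`. Below is a minimal, standard-library-only sketch that assembles the same `mysql://user:password@host[:port]/name` shape with percent-encoded credentials. The helper name `mysql_uri` is hypothetical.

```python
from typing import Optional
from urllib.parse import quote, unquote, urlsplit


def mysql_uri(user: str, password: str, host: str, name: str,
              port: Optional[int] = None) -> str:
    """Build a mysql:// URI, percent-encoding user, password and database name."""
    creds = f"{quote(user, safe='')}:{quote(password, safe='')}"
    netloc = f"{creds}@{host}" + (f":{port}" if port is not None else "")
    return f"mysql://{netloc}/{quote(name, safe='')}"


if __name__ == "__main__":
    uri = mysql_uri("pda", "p@ss:w/rd", "db", "pda", 3306)
    print(uri)                              # mysql://pda:p%40ss%3Aw%2Frd@db:3306/pda
    print(unquote(urlsplit(uri).password))  # p@ss:w/rd
```

For the plain credentials used in the compose file, the helper reproduces `mysql://pda:changeme@host.docker.internal/pda` unchanged. The encoding only matters once the password contains reserved characters.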

33
docker-test/Dockerfile
Normal file
@@ -0,0 +1,33 @@
+FROM debian:stretch-slim
+LABEL maintainer="k@ndk.name"
+
+ENV LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8 LANGUAGE=en_US.UTF-8
+
+RUN apt-get update -y \
+&& apt-get install -y --no-install-recommends apt-transport-https locales locales-all python3-pip python3-setuptools python3-dev curl libsasl2-dev libldap2-dev libssl-dev libxml2-dev libxslt1-dev libxmlsec1-dev libffi-dev build-essential libmariadb-dev-compat \
+&& curl -sL https://deb.nodesource.com/setup_10.x | bash - \
+&& curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add - \
+&& echo "deb https://dl.yarnpkg.com/debian/ stable main" > /etc/apt/sources.list.d/yarn.list \
+&& apt-get update -y \
+&& apt-get install -y nodejs yarn \
+&& apt-get clean -y \
+&& rm -rf /var/lib/apt/lists/*
+
+# We copy just the requirements.txt first to leverage Docker cache
+COPY ./requirements.txt /app/requirements.txt
+
+WORKDIR /app
+RUN pip3 install -r requirements.txt
+
+COPY . /app
+COPY ./docker/entrypoint.sh /usr/local/bin/
+RUN chmod +x /usr/local/bin/entrypoint.sh
+
+ENV FLASK_APP=powerdnsadmin/__init__.py
+RUN yarn install --pure-lockfile --production \
+&& yarn cache clean \
+&& flask assets build
+
+COPY ./docker-test/wait-for-pdns.sh /opt
+RUN chmod u+x /opt/wait-for-pdns.sh
+CMD ["/opt/wait-for-pdns.sh", "/usr/local/bin/pytest","--capture=no","-vv"]
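The new test image runs `/opt/wait-for-pdns.sh` before handing off to pytest, so the tests start only once their dependency accepts connections. That script's contents are not shown in this commit. The removed entrypoint further down relies on the same idea for MySQL, polling with an `until nc -zv $PDA_DB_HOST $PDA_DB_PORT; do ... sleep 1; done` loop. The following is a Python sketch of that polling loop. It adds an overall deadline, which the shell loop does not have. The function name and default timings are illustrative assumptions.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0,
                  interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout expires.

    Mirrors the `until nc -zv host port; do sleep 1; done` loop, plus a
    deadline (an addition: the shell loop retries forever).
    Returns True once the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful TCP handshake is all nc -z checks for.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            print(f"{host}:{port} is unavailable - sleeping")
            time.sleep(interval)
```

Returning `False` rather than looping forever lets a caller fail the test run with a clear message. The unbounded shell loop would instead leave a container hanging when the dependency never comes up.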

@@ -2,8 +2,8 @@ FROM ubuntu:latest
 
 RUN apt-get update && apt-get install -y pdns-backend-sqlite3 pdns-server sqlite3
 
-COPY ./docker/PowerDNS-Admin/pdns.sqlite.sql /data/pdns.sql
-ADD ./docker/PowerDNS-Admin/start.sh /data/
+COPY ./docker-test/pdns.sqlite.sql /data/pdns.sql
+ADD ./docker-test/start.sh /data/
 
 RUN rm -f /etc/powerdns/pdns.d/pdns.simplebind.conf
 RUN rm -f /etc/powerdns/pdns.d/bind.conf

33
docker/Dockerfile
Normal file
@@ -0,0 +1,33 @@
+FROM debian:stretch-slim
+LABEL maintainer="k@ndk.name"
+
+ENV LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8 LANGUAGE=en_US.UTF-8
+
+RUN apt-get update -y \
+&& apt-get install -y --no-install-recommends apt-transport-https locales locales-all python3-pip python3-setuptools python3-dev curl libsasl2-dev libldap2-dev libssl-dev libxml2-dev libxslt1-dev libxmlsec1-dev libffi-dev build-essential libmariadb-dev-compat \
+&& curl -sL https://deb.nodesource.com/setup_10.x | bash - \
+&& curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add - \
+&& echo "deb https://dl.yarnpkg.com/debian/ stable main" > /etc/apt/sources.list.d/yarn.list \
+&& apt-get update -y \
+&& apt-get install -y nodejs yarn \
+&& apt-get clean -y \
+&& rm -rf /var/lib/apt/lists/*
+
+# We copy just the requirements.txt first to leverage Docker cache
+COPY ./requirements.txt /app/requirements.txt
+
+WORKDIR /app
+RUN pip3 install -r requirements.txt
+
+COPY . /app
+COPY ./docker/entrypoint.sh /usr/local/bin/
+RUN chmod +x /usr/local/bin/entrypoint.sh
+
+ENV FLASK_APP=powerdnsadmin/__init__.py
+RUN yarn install --pure-lockfile --production \
+&& yarn cache clean \
+&& flask assets build
+
+EXPOSE 80/tcp
+ENTRYPOINT ["entrypoint.sh"]
+CMD ["gunicorn","powerdnsadmin:create_app()"]

@@ -1,48 +0,0 @@
-FROM ubuntu:16.04
-MAINTAINER Khanh Ngo "k@ndk.name"
-ARG ENVIRONMENT=development
-ENV ENVIRONMENT=${ENVIRONMENT}
-WORKDIR /powerdns-admin
-
-RUN apt-get update -y
-RUN apt-get install -y apt-transport-https
-
-RUN apt-get install -y locales locales-all
-ENV LC_ALL en_US.UTF-8
-ENV LANG en_US.UTF-8
-ENV LANGUAGE en_US.UTF-8
-
-RUN apt-get install -y python3-pip python3-dev supervisor curl mysql-client
-
-# Install node 10.x
-RUN curl -sL https://deb.nodesource.com/setup_10.x | bash -
-RUN apt-get install -y nodejs
-
-RUN curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
-RUN echo "deb https://dl.yarnpkg.com/debian/ stable main" > /etc/apt/sources.list.d/yarn.list
-
-# Install yarn
-RUN apt-get update -y
-RUN apt-get install -y yarn
-
-# Install Netcat for DB healthcheck
-RUN apt-get install -y netcat
-
-# lib for building mysql db driver
-RUN apt-get install -y libmysqlclient-dev
-
-# lib for building ldap and ssl-based application
-RUN apt-get install -y libsasl2-dev libldap2-dev libssl-dev
-
-# lib for building python3-saml
-RUN apt-get install -y libxml2-dev libxslt1-dev libxmlsec1-dev libffi-dev pkg-config
-
-COPY ./requirements.txt /powerdns-admin/requirements.txt
-RUN pip3 install -r requirements.txt
-
-ADD ./supervisord.conf /etc/supervisord.conf
-ADD . /powerdns-admin/
-COPY ./configs/${ENVIRONMENT}.py /powerdns-admin/config.py
-COPY ./docker/PowerDNS-Admin/entrypoint.sh /entrypoint.sh
-
-ENTRYPOINT ["/entrypoint.sh"]

@@ -1,46 +0,0 @@
-FROM ubuntu:16.04
-MAINTAINER Khanh Ngo "k@ndk.name"
-ARG ENVIRONMENT=development
-ENV ENVIRONMENT=${ENVIRONMENT}
-WORKDIR /powerdns-admin
-
-RUN apt-get update -y
-RUN apt-get install -y apt-transport-https
-
-RUN apt-get install -y locales locales-all
-ENV LC_ALL en_US.UTF-8
-ENV LANG en_US.UTF-8
-ENV LANGUAGE en_US.UTF-8
-
-RUN apt-get install -y python3-pip python3-dev supervisor curl mysql-client
-
-RUN curl -sL https://deb.nodesource.com/setup_10.x | bash -
-
-RUN apt-get install -y nodejs
-
-RUN curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
-RUN echo "deb https://dl.yarnpkg.com/debian/ stable main" > /etc/apt/sources.list.d/yarn.list
-
-# Install yarn
-RUN apt-get update -y
-RUN apt-get install -y yarn
-
-# Install Netcat for DB healthcheck
-RUN apt-get install -y netcat
-
-# lib for building mysql db driver
-RUN apt-get install -y libmysqlclient-dev
-
-# lib for building ldap and ssl-based application
-RUN apt-get install -y libsasl2-dev libldap2-dev libssl-dev
-
-# lib for building python3-saml
-RUN apt-get install -y libxml2-dev libxslt1-dev libxmlsec1-dev libffi-dev pkg-config
-
-COPY ./requirements.txt /powerdns-admin/requirements.txt
-COPY ./docker/PowerDNS-Admin/wait-for-pdns.sh /opt
-RUN chmod u+x /opt/wait-for-pdns.sh
-
-RUN pip3 install -r requirements.txt
-
-CMD ["/opt/wait-for-pdns.sh", "/usr/local/bin/pytest","--capture=no","-vv"]

@@ -1,71 +0,0 @@
-#!/bin/bash
-
-set -o errexit
-set -o pipefail
-
-
-# == Vars
-#
-DB_MIGRATION_DIR='/powerdns-admin/migrations'
-if [[ -z ${PDNS_PROTO} ]];
-then PDNS_PROTO="http"
-fi
-
-if [[ -z ${PDNS_PORT} ]];
-then PDNS_PORT=8081
-fi
-
-
-
-# Wait for us to be able to connect to MySQL before proceeding
-echo "===> Waiting for $PDA_DB_HOST MySQL service"
-until nc -zv \
-$PDA_DB_HOST \
-$PDA_DB_PORT;
-do
-echo "MySQL ($PDA_DB_HOST) is unavailable - sleeping"
-sleep 1
-done
-
-
-echo "===> DB management"
-# Go in Workdir
-cd /powerdns-admin
-
-if [ ! -d "${DB_MIGRATION_DIR}" ]; then
-echo "---> Running DB Init"
-flask db init --directory ${DB_MIGRATION_DIR}