Mirror of https://github.com/cwinfo/powerdns-admin.git (synced 2025-04-28 06:08:52 +00:00)

Compare commits

793 Commits


.github

FUNDING.yml

.gitignore.travis.ymlLICENSEREADME.mdSECURITY.mdVERSIONISSUE_TEMPLATE

PULL_REQUEST_TEMPLATE.mdSUPPORT.mddependabot.ymllabels.ymlstale.ymlworkflows

configs

deploy

auto-setup

docker

kubernetes

docker-test

docker

docs

API.mdCODE_OF_CONDUCT.mdCONTRIBUTING.md

announcements

oauth.mdscreenshots

wiki

README.md

configuration

Configure-Active-Directory-Authentication-using-Group-Security.mdEnvironment-variables.mdGetting-started.mdbasic_settings.md

database-setup

debug

features

images

readme_screenshots

webui

install

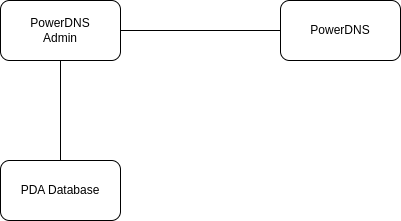

Architecture.pngGeneral.mdRunning-PowerDNS-Admin-as-a-service-(Systemd).mdRunning-PowerDNS-Admin-on-Centos-7.mdRunning-PowerDNS-Admin-on-Docker.mdRunning-PowerDNS-Admin-on-Fedora-23.mdRunning-PowerDNS-Admin-on-Fedora-30.mdRunning-PowerDNS-Admin-on-Ubuntu-or-Debian.mdRunning-on-FreeBSD.md

web-server

migrations

env.py

package.jsonversions

0967658d9c0d_add_apikey_account_mapping_table.py0d3d93f1c2e0_add_domain_id_to_history_table.py31a4ed468b18_remove_all_settings_in_the_db.py3f76448bb6de_add_user_confirmed_column.py6ea7dc05f496_fix_typo_in_history_detail.py787bdba9e147_init_db.pyb24bf17725d2_add_unique_index_to_settings_table_keys.pyf41520e41cee_update_domain_type_length.pyfbc7cf864b24_update_history_detail_quotes.py

powerdnsadmin

.github/FUNDING.yml (vendored, 2 changes)

    @@ -1 +1 @@
    -github: [ngoduykhanh]
    +github: [AzorianSolutions]

.github/ISSUE_TEMPLATE/config.yml (vendored, Normal file, 7 changes)

    @@ -0,0 +1,7 @@
    +---
    +# Reference: https://help.github.com/en/github/building-a-strong-community/configuring-issue-templates-for-your-repository#configuring-the-template-chooser
    +blank_issues_enabled: false
    +contact_links:
    +  - name: 📖 Project Update - PLEASE READ!
    +    url: https://github.com/PowerDNS-Admin/PowerDNS-Admin/discussions/1708
    +    about: "Important information about the future of this project"

.github/PULL_REQUEST_TEMPLATE.md (vendored, Normal file, 14 changes)

    @@ -0,0 +1,14 @@
    +<!--
    +Thank you for your interest in contributing to the PowerDNS Admin project! Please note that our contribution
    +policy requires that a feature request or bug report be approved and assigned prior to opening a pull request.
    +This helps avoid wasted time and effort on a proposed change that we might want to or be able to accept.
    +
    +IF YOUR PULL REQUEST DOES NOT REFERENCE AN ISSUE WHICH HAS BEEN ASSIGNED TO YOU, IT WILL BE CLOSED AUTOMATICALLY!
    +
    +Please specify your assigned issue number on the line below.
    +-->
    +### Fixes: #1234
    +
    +<!--
    +Please include a summary of the proposed changes below.
    +-->

.github/SUPPORT.md (vendored, Normal file, 15 changes)

    @@ -0,0 +1,15 @@
    +# PowerDNS Admin
    +
    +## Project Support
    +
    +**Looking for help?** PDA has a somewhat active community of fellow users that may be able to provide assistance.
    +Just [start a discussion](https://github.com/PowerDNS-Admin/PowerDNS-Admin/discussions/new) right here on GitHub!
    +
    +Looking to chat with someone? Join our [Discord Server](https://discord.powerdnsadmin.org).
    +
    +Some general tips for engaging here on GitHub:
    +
    +* Register for a free [GitHub account](https://github.com/signup) if you haven't already.
    +* You can use [GitHub Markdown](https://docs.github.com/en/get-started/writing-on-github/getting-started-with-writing-and-formatting-on-github/basic-writing-and-formatting-syntax) for formatting text and adding images.
    +* To help mitigate notification spam, please avoid "bumping" issues with no activity. (To vote an issue up or down, use a :thumbsup: or :thumbsdown: reaction.)
    +* Please avoid pinging members with `@` unless they've previously expressed interest or involvement with that particular issue.

.github/dependabot.yml (vendored, Normal file, 23 changes)

    @@ -0,0 +1,23 @@
    +---
    +version: 2
    +updates:
    +  - package-ecosystem: npm
    +    target-branch: dev
    +    directory: /
    +    schedule:
    +      interval: daily
    +    ignore:
    +      - dependency-name: "*"
    +        update-types: [ "version-update:semver-major" ]
    +    labels:
    +      - 'feature / dependency'
    +  - package-ecosystem: pip
    +    target-branch: dev
    +    directory: /
    +    schedule:
    +      interval: daily
    +    ignore:
    +      - dependency-name: "*"
    +        update-types: [ "version-update:semver-major" ]
    +    labels:
    +      - 'feature / dependency'
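The `ignore` rule in the Dependabot configuration above skips major-version bumps for every dependency while still allowing minor and patch updates. The following is a minimal Python sketch of that decision, not Dependabot's actual implementation. The helper names `major_of` and `is_ignored` are hypothetical, and the sketch assumes plain dotted version strings.

```python
def major_of(version: str) -> int:
    """Return the major component of a dotted version such as '2.1.0' or 'v2.1.0'."""
    return int(version.lstrip("v").split(".")[0])


def is_ignored(current: str, candidate: str) -> bool:
    """Approximate `update-types: ["version-update:semver-major"]` under `ignore`:
    an update is skipped when it would raise the major version."""
    return major_of(candidate) > major_of(current)


# Hypothetical version pairs, for illustration only.
for current, candidate in [("2.1.0", "2.3.4"), ("2.1.0", "3.0.0")]:
    verdict = "ignored" if is_ignored(current, candidate) else "proposed"
    print(f"{current} -> {candidate}: {verdict}")
```

Under these assumptions, a bump from 2.1.0 to 2.3.4 would still be proposed against the `dev` branch, while 2.1.0 to 3.0.0 would be skipped.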

.github/labels.yml (vendored, Normal file, 98 changes)

    @@ -0,0 +1,98 @@
    +---
    +labels:
    +  - name: bug / broken-feature
    +    description: Existing feature malfunctioning or broken
    +    color: 'd73a4a'
    +  - name: bug / security-vulnerability
    +    description: Security vulnerability identified with the application
    +    color: 'd73a4a'
    +  - name: docs / discussion
    +    description: Documentation change proposals
    +    color: '0075ca'
    +  - name: docs / request
    +    description: Documentation change request
    +    color: '0075ca'
    +  - name: feature / dependency
    +    description: Existing feature dependency
    +    color: '008672'
    +  - name: feature / discussion
    +    description: New or existing feature discussion
    +    color: '008672'
    +  - name: feature / request
    +    description: New feature or enhancement request
    +    color: '008672'
    +  - name: feature / update
    +    description: Existing feature modification
    +    color: '008672'
    +  - name: help / deployment
    +    description: Questions regarding application deployment
    +    color: 'd876e3'
    +  - name: help / features
    +    description: Questions regarding the use of application features
    +    color: 'd876e3'
    +  - name: help / other
    +    description: General questions not specific to application deployment or features
    +    color: 'd876e3'
    +  - name: mod / accepted
    +    description: This request has been accepted
    +    color: 'e5ef23'
    +  - name: mod / announcement
    +    description: This is an admin announcement
    +    color: 'e5ef23'
    +  - name: mod / change-request
    +    description: Used by internal developers to indicate a change-request.
    +    color: 'e5ef23'
    +  - name: mod / changes-requested
    +    description: Changes have been requested before proceeding
    +    color: 'e5ef23'
    +  - name: mod / duplicate
    +    description: This issue or pull request already exists
    +    color: 'e5ef23'
    +  - name: mod / good-first-issue
    +    description: Good for newcomers
    +    color: 'e5ef23'
    +  - name: mod / help-wanted
    +    description: Extra attention is needed
    +    color: 'e5ef23'
    +  - name: mod / invalid
    +    description: This doesn't seem right
    +    color: 'e5ef23'
    +  - name: mod / rejected
    +    description: This request has been rejected
    +    color: 'e5ef23'
    +  - name: mod / reviewed
    +    description: This request has been reviewed
    +    color: 'e5ef23'
    +  - name: mod / reviewing
    +    description: This request is being reviewed
    +    color: 'e5ef23'
    +  - name: mod / stale
    +    description: This request has gone stale
    +    color: 'e5ef23'
    +  - name: mod / tested
    +    description: This has been tested
    +    color: 'e5ef23'
    +  - name: mod / testing
    +    description: This is being tested
    +    color: 'e5ef23'
    +  - name: mod / wont-fix
    +    description: This will not be worked on
    +    color: 'e5ef23'
    +  - name: skill / database
    +    description: Requires a database skill-set
    +    color: '5319E7'
    +  - name: skill / docker
    +    description: Requires a Docker skill-set
    +    color: '5319E7'
    +  - name: skill / documentation
    +    description: Requires a documentation skill-set
    +    color: '5319E7'
    +  - name: skill / html
    +    description: Requires a HTML skill-set
    +    color: '5319E7'
    +  - name: skill / javascript
    +    description: Requires a JavaScript skill-set
    +    color: '5319E7'
    +  - name: skill / python
    +    description: Requires a Python skill-set
    +    color: '5319E7'
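Every label in the file above follows a `category / topic` naming pattern and carries a six-digit hexadecimal color without a leading `#`. The Python sketch below shows one way such entries might be checked before syncing them. The helpers `split_label` and `is_hex_color` are hypothetical and are not part of the repository or of any label-sync tool.

```python
import re


def split_label(name: str) -> tuple[str, str]:
    """Split 'category / topic' into its two parts, rejecting other shapes."""
    category, sep, topic = name.partition(" / ")
    if not sep or not category or not topic:
        raise ValueError(f"label {name!r} does not follow 'category / topic'")
    return category, topic


def is_hex_color(color: str) -> bool:
    """True for six hexadecimal digits with no leading '#'."""
    return re.fullmatch(r"[0-9a-fA-F]{6}", color) is not None


# Two entries copied from the label list above.
labels = [
    {"name": "bug / broken-feature", "color": "d73a4a"},
    {"name": "skill / python", "color": "5319E7"},
]
for label in labels:
    category, topic = split_label(label["name"])
    print(category, topic, is_hex_color(label["color"]))
```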

.github/stale.yml (vendored, 19 changes)

    @@ -1,19 +0,0 @@
    -# Number of days of inactivity before an issue becomes stale
    -daysUntilStale: 60
    -# Number of days of inactivity before a stale issue is closed
    -daysUntilClose: 7
    -# Issues with these labels will never be considered stale
    -exemptLabels:
    -  - pinned
    -  - security
    -  - enhancement
    -  - feature request
    -# Label to use when marking an issue as stale
    -staleLabel: wontfix
    -# Comment to post when marking an issue as stale. Set to `false` to disable
    -markComment: >
    -  This issue has been automatically marked as stale because it has not had
    -  recent activity. It will be closed if no further activity occurs. Thank you
    -  for your contributions.
    -# Comment to post when closing a stale issue. Set to `false` to disable
    -closeComment: true
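The stale-bot configuration removed in this diff marked an issue stale after 60 days without activity and closed it 7 days later. As a rough illustration of that timeline, the Python sketch below computes both dates from the last activity date. The function `stale_timeline` is hypothetical, and the real bot runs periodically, so actual timing would only approximate these dates.

```python
from datetime import date, timedelta

DAYS_UNTIL_STALE = 60  # daysUntilStale in the removed stale.yml
DAYS_UNTIL_CLOSE = 7   # daysUntilClose in the removed stale.yml


def stale_timeline(last_activity: date) -> tuple[date, date]:
    """Return (date marked stale, date closed), assuming no further activity."""
    stale_on = last_activity + timedelta(days=DAYS_UNTIL_STALE)
    close_on = stale_on + timedelta(days=DAYS_UNTIL_CLOSE)
    return stale_on, close_on


# Hypothetical last-activity date, for illustration only.
stale_on, close_on = stale_timeline(date(2023, 1, 1))
print(f"stale on {stale_on}, closed on {close_on}")
```

Issues carrying one of the `exemptLabels` would have been excluded from this timeline entirely.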

.github/workflows/build-and-publish.yml (vendored, Normal file, 81 changes)

    @@ -0,0 +1,81 @@
    +---
    +name: 'Docker Image'
    +
    +on:
    +  workflow_dispatch:
    +  push:
    +    branches:
    +      - 'dev'
    +      - 'master'
    +    tags:
    +      - 'v*.*.*'
    +    paths-ignore:
    +      - .github/**
    +      - deploy/**
    +      - docker-test/**
    +      - docs/**
    +      - .dockerignore
    +      - .gitattributes
    +      - .gitignore
    +      - .lgtm.yml
    +      - .whitesource
    +      - .yarnrc
    +      - docker-compose.yml
    +      - docker-compose-test.yml
    +      - LICENSE
    +      - README.md
    +      - SECURITY.md
    +
    +jobs:
    +  build-and-push-docker-image:
    +    name: Build Docker Image
    +    runs-on: ubuntu-latest
    +
    +    steps:
    +      - name: Repository Checkout
    +        uses: actions/checkout@v2
    +
    +      - name: Docker Image Metadata
    +        id: meta
    +        uses: docker/metadata-action@v3
    +        with:
    +          images: |
    +            powerdnsadmin/pda-legacy
    +          tags: |
    +            type=ref,event=tag
    +            type=semver,pattern={{version}}
    +            type=semver,pattern={{major}}.{{minor}}
    +            type=semver,pattern={{major}}
    +
    +      - name: QEMU Setup
    +        uses: docker/setup-qemu-action@v2
    +
    +      - name: Docker Buildx Setup
    +        id: buildx
    +        uses: docker/setup-buildx-action@v1
    +
    +      - name: Docker Hub Authentication
    +        uses: docker/login-action@v1
    +        with:
    +          username: ${{ secrets.DOCKERHUB_USERNAME_V2 }}
    +          password: ${{ secrets.DOCKERHUB_TOKEN_V2 }}
    +
    +      - name: Docker Image Build
    +        uses: docker/build-push-action@v4
    +        with:
    +          platforms: linux/amd64,linux/arm64
    +          context: ./
    +          file: ./docker/Dockerfile
    +          push: true
    +          tags: powerdnsadmin/pda-legacy:${{ github.ref_name }}
    +
    +      - name: Docker Image Release Tagging
    +        uses: docker/build-push-action@v4
    +        if: ${{ startsWith(github.ref, 'refs/tags/v') }}
    +        with:
    +          platforms: linux/amd64,linux/arm64
    +          context: ./
    +          file: ./docker/Dockerfile
    +          push: true
    +          tags: ${{ steps.meta.outputs.tags }}
    +          labels: ${{ steps.meta.outputs.labels }}
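The metadata step in the workflow above derives several image tags from a single release tag such as `v1.2.3`: the Git tag itself, plus the `{{version}}`, `{{major}}.{{minor}}` and `{{major}}` semver patterns. The Python sketch below approximates that expansion for plain `vMAJOR.MINOR.PATCH` tags only. The function `image_tags` is hypothetical, and the real `docker/metadata-action` handles pre-releases and other cases that this sketch does not cover.

```python
import re

IMAGE = "powerdnsadmin/pda-legacy"  # image name used in the workflow above


def image_tags(git_tag: str) -> list[str]:
    """Expand a 'vMAJOR.MINOR.PATCH' Git tag into the image references the
    four tag rules would be expected to yield for a plain release."""
    match = re.fullmatch(r"v(\d+)\.(\d+)\.(\d+)", git_tag)
    if match is None:
        raise ValueError(f"{git_tag!r} is not a plain vMAJOR.MINOR.PATCH tag")
    major, minor, patch = match.groups()
    suffixes = [
        git_tag,                      # type=ref,event=tag
        f"{major}.{minor}.{patch}",   # type=semver,pattern={{version}}
        f"{major}.{minor}",           # type=semver,pattern={{major}}.{{minor}}
        major,                        # type=semver,pattern={{major}}
    ]
    return [f"{IMAGE}:{suffix}" for suffix in suffixes]


# Hypothetical release tag, for illustration only.
for ref in image_tags("v1.2.3"):
    print(ref)
```

Pushes to `dev` or `master` skip the release tagging step, so under this workflow they would only publish the single `powerdnsadmin/pda-legacy:<branch name>` tag from the earlier build step.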

.github/workflows/codeql-analysis.yml (vendored, Normal file, 134 changes)

    @@ -0,0 +1,134 @@
    +---
    +# For most projects, this workflow file will not need changing; you simply need
    +# to commit it to your repository.
    +#
    +# You may wish to alter this file to override the set of languages analyzed,
    +# or to provide custom queries or build logic.
    +#
    +# ******** NOTE ********
    +# We have attempted to detect the languages in your repository. Please check
    +# the `language` matrix defined below to confirm you have the correct set of
    +# supported CodeQL languages.
    +#
    +name: "CodeQL"
    +
    +on:
    +  workflow_dispatch:
    +  push:
    +    branches:
    +      - 'dev'
    +      - 'main'
    +      - 'master'
    +      - 'dependabot/**'
    +      - 'feature/**'
    +      - 'issue/**'
    +    paths-ignore:
    +      - .github/**
    +      - deploy/**
    +      - docker/**
    +      - docker-test/**
    +      - docs/**
    +      - powerdnsadmin/static/assets/**
    +      - powerdnsadmin/static/custom/css/**
    +      - powerdnsadmin/static/img/**
    +      - powerdnsadmin/swagger-spec.yaml
    +      - .dockerignore
    +      - .gitattributes
    +      - .gitignore
    +      - .lgtm.yml
    +      - .whitesource
    +      - .yarnrc
    +      - docker-compose.yml
    +      - docker-compose-test.yml
    +      - LICENSE
    +      - package.json
    +      - README.md
    +      - requirements.txt
    +      - SECURITY.md
    +      - yarn.lock
    +  pull_request:
    +    # The branches below must be a subset of the branches above
    +    branches:
    +      - 'dev'
    +      - 'main'
    +      - 'master'
    +      - 'dependabot/**'
    +      - 'feature/**'
    +      - 'issue/**'
    +    paths-ignore:
    +      - .github/**
    +      - deploy/**
    +      - docker/**
    +      - docker-test/**
    +      - docs/**
    +      - powerdnsadmin/static/assets/**
    +      - powerdnsadmin/static/custom/css/**
    +      - powerdnsadmin/static/img/**
    +      - powerdnsadmin/swagger-spec.yaml
    +      - .dockerignore
    +      - .gitattributes
    +      - .gitignore
    +      - .lgtm.yml
    +      - .whitesource
    +      - .yarnrc
    +      - docker-compose.yml
    +      - docker-compose-test.yml
    +      - LICENSE
    +      - package.json
    +      - README.md
    +      - requirements.txt
    +      - SECURITY.md
    +      - yarn.lock
    +  schedule:
    +    - cron: '45 2 * * 2'
    +
    +jobs:
    +  analyze:
    +    name: Analyze
    +    runs-on: ubuntu-latest
    +    permissions:
    +      actions: read
    +      contents: read
    +      security-events: write
    +
    +    strategy:
    +      fail-fast: false
    +      matrix:
    +        language: [ 'javascript', 'python' ]
    +        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]
    +        # Learn more about CodeQL language support at https://aka.ms/codeql-docs/language-support
    +
    +    steps:
    +      - name: Checkout repository
    +        uses: actions/checkout@v3

|

||||

|

||||

# Initializes the CodeQL tools for scanning.

|

||||

- name: Initialize CodeQL

|

||||

uses: github/codeql-action/init@v2

|

||||

with:

|

||||

languages: ${{ matrix.language }}

|

||||

# If you wish to specify custom queries, you can do so here or in a config file.

|

||||

# By default, queries listed here will override any specified in a config file.

|

||||

# Prefix the list here with "+" to use these queries and those in the config file.

|

||||

|

||||

# Details on CodeQL's query packs refer to : https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/configuring-code-scanning#using-queries-in-ql-packs

|

||||

# queries: security-extended,security-and-quality

|

||||

|

||||

|

||||

# Autobuild attempts to build any compiled languages (C/C++, C#, or Java).

|

||||

# If this step fails, then you should remove it and run the build manually (see below)

|

||||

- name: Autobuild

|

||||

uses: github/codeql-action/autobuild@v2

|

||||

|

||||

# ℹ️ Command-line programs to run using the OS shell.

|

||||

# 📚 See https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions#jobsjob_idstepsrun

|

||||

|

||||

# If the Autobuild fails above, remove it and uncomment the following three lines.

|

||||

# Modify them (or add more) to build your code; please refer to the EXAMPLE below for guidance.

|

||||

|

||||

# - run: |

|

||||

# echo "Run, Build Application using script"

|

||||

# ./location_of_script_within_repo/buildscript.sh

|

||||

|

||||

- name: Perform CodeQL Analysis

|

||||

uses: github/codeql-action/analyze@v2
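The `paths-ignore` lists above decide whether a push or pull request triggers the CodeQL run: the workflow is skipped only when every changed file matches an ignored pattern. The following is a minimal sketch of that rule, not GitHub's actual glob matcher. It assumes only the two pattern shapes that appear in this workflow (`dir/**` prefixes and exact file names); the helper names `is_ignored` and `workflow_runs` are hypothetical.

```python
# Simplified model of the `paths-ignore` filter. It handles only the two
# pattern shapes used in this workflow: "dir/**" prefixes and exact file
# names. GitHub's real matcher supports more glob syntax than this.
IGNORED = [
    ".github/**", "deploy/**", "docker/**", "docker-test/**", "docs/**",
    "powerdnsadmin/static/assets/**", "powerdnsadmin/static/custom/css/**",
    "powerdnsadmin/static/img/**", "powerdnsadmin/swagger-spec.yaml",
    ".dockerignore", ".gitattributes", ".gitignore", ".lgtm.yml",
    ".whitesource", ".yarnrc", "docker-compose.yml",
    "docker-compose-test.yml", "LICENSE", "package.json", "README.md",
    "requirements.txt", "SECURITY.md", "yarn.lock",
]


def is_ignored(path, patterns=IGNORED):
    """Return True if `path` matches one of the ignore patterns."""
    for pattern in patterns:
        if pattern.endswith("/**"):
            # "docs/**" -> prefix "docs/": anything below that directory.
            if path.startswith(pattern[:-2]):
                return True
        elif path == pattern:
            return True
    return False


def workflow_runs(changed_files):
    """The workflow runs if at least one changed file is not ignored."""
    return any(not is_ignored(path) for path in changed_files)


print(workflow_runs(["README.md", "docs/wiki/index.md"]))
print(workflow_runs(["README.md", "powerdnsadmin/models/user.py"]))
```

Under this model, a change that touches only documentation and packaging files would not start an analysis, while any change to application code would.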

|

||||

24

.github/workflows/lock.yml

vendored

Normal file

@ -0,0 +1,24 @@

|

||||

---

|

||||

# lock-threads (https://github.com/marketplace/actions/lock-threads)

|

||||

name: 'Lock threads'

|

||||

|

||||

on:

|

||||

schedule:

|

||||

- cron: '0 3 * * *'

|

||||

workflow_dispatch:

|

||||

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

|

||||

jobs:

|

||||

lock:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: dessant/lock-threads@v3

|

||||

with:

|

||||

issue-inactive-days: 90

|

||||

pr-inactive-days: 30

|

||||

issue-lock-reason: 'resolved'

|

||||

exclude-any-issue-labels: 'bug / security-vulnerability, mod / announcement, mod / accepted, mod / reviewing, mod / testing'

|

||||

exclude-any-pr-labels: 'bug / security-vulnerability, mod / announcement, mod / accepted, mod / reviewing, mod / testing'

|

||||

92

.github/workflows/mega-linter.yml

vendored

Normal file

@ -0,0 +1,92 @@

|

||||

---

|

||||

# MegaLinter GitHub Action configuration file

|

||||

# More info at https://megalinter.io

|

||||

name: MegaLinter

|

||||

|

||||

on:

|

||||

workflow_dispatch:

|

||||

push:

|

||||

branches-ignore:

|

||||

- "*"

|

||||

- "dev"

|

||||

- "main"

|

||||

- "master"

|

||||

- "dependabot/**"

|

||||

- "feature/**"

|

||||

- "issues/**"

|

||||

- "release/**"

|

||||

|

||||

env: # Comment env block if you do not want to apply fixes

|

||||

# Apply linter fixes configuration

|

||||

APPLY_FIXES: all # When active, APPLY_FIXES must also be defined as environment variable (in github/workflows/mega-linter.yml or other CI tool)

|

||||

APPLY_FIXES_EVENT: all # Decide which event triggers application of fixes in a commit or a PR (pull_request, push, all)

|

||||

APPLY_FIXES_MODE: pull_request # If APPLY_FIXES is used, defines if the fixes are directly committed (commit) or posted in a PR (pull_request)

|

||||

|

||||

concurrency:

|

||||

group: ${{ github.ref }}-${{ github.workflow }}

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

build:

|

||||

name: MegaLinter

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

# Git Checkout

|

||||

- name: Checkout Code

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

token: ${{ secrets.PAT || secrets.GITHUB_TOKEN }}

|

||||

|

||||

# MegaLinter

|

||||

- name: MegaLinter

|

||||

id: ml

|

||||

# You can override MegaLinter flavor used to have faster performances

|

||||

# More info at https://megalinter.io/flavors/

|

||||

uses: oxsecurity/megalinter@v6

|

||||

env:

|

||||

# All available variables are described in documentation

|

||||

# https://megalinter.io/configuration/

|

||||

VALIDATE_ALL_CODEBASE: true # Validates all source when push on main, else just the git diff with main. Override with true if you always want to lint all sources

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

PAT: ${{ secrets.PAT }}

|

||||

# ADD YOUR CUSTOM ENV VARIABLES HERE OR DEFINE THEM IN A FILE .mega-linter.yml AT THE ROOT OF YOUR REPOSITORY

|

||||

# DISABLE: COPYPASTE,SPELL # Uncomment to disable copy-paste and spell checks

|

||||

|

||||

# Upload MegaLinter artifacts

|

||||

- name: Archive production artifacts

|

||||

if: ${{ success() || failure() }}

|

||||

uses: actions/upload-artifact@v3

|

||||

with:

|

||||

name: MegaLinter reports

|

||||

path: |

|

||||

megalinter-reports

|

||||

mega-linter.log

|

||||

|

||||

# Create pull request if applicable (for now works only on PR from same repository, not from forks)

|

||||

- name: Create PR with applied fixes

|

||||

id: cpr

|

||||

if: steps.ml.outputs.has_updated_sources == 1 && (env.APPLY_FIXES_EVENT == 'all' || env.APPLY_FIXES_EVENT == github.event_name) && env.APPLY_FIXES_MODE == 'pull_request' && (github.event_name == 'push' || github.event.pull_request.head.repo.full_name == github.repository)

|

||||

uses: peter-evans/create-pull-request@v4

|

||||

with:

|

||||

token: ${{ secrets.PAT || secrets.GITHUB_TOKEN }}

|

||||

commit-message: "[MegaLinter] Apply linters automatic fixes"

|

||||

title: "[MegaLinter] Apply linters automatic fixes"

|

||||

labels: bot

|

||||

|

||||

- name: Create PR output

|

||||

if: steps.ml.outputs.has_updated_sources == 1 && (env.APPLY_FIXES_EVENT == 'all' || env.APPLY_FIXES_EVENT == github.event_name) && env.APPLY_FIXES_MODE == 'pull_request' && (github.event_name == 'push' || github.event.pull_request.head.repo.full_name == github.repository)

|

||||

run: |

|

||||

echo "Pull Request Number - ${{ steps.cpr.outputs.pull-request-number }}"

|

||||

echo "Pull Request URL - ${{ steps.cpr.outputs.pull-request-url }}"

|

||||

|

||||

# Push new commit if applicable (for now works only on PR from same repository, not from forks)

|

||||

- name: Prepare commit

|

||||

if: steps.ml.outputs.has_updated_sources == 1 && (env.APPLY_FIXES_EVENT == 'all' || env.APPLY_FIXES_EVENT == github.event_name) && env.APPLY_FIXES_MODE == 'commit' && github.ref != 'refs/heads/main' && (github.event_name == 'push' || github.event.pull_request.head.repo.full_name == github.repository)

|

||||

run: sudo chown -Rc $UID .git/

|

||||

- name: Commit and push applied linter fixes

|

||||

if: steps.ml.outputs.has_updated_sources == 1 && (env.APPLY_FIXES_EVENT == 'all' || env.APPLY_FIXES_EVENT == github.event_name) && env.APPLY_FIXES_MODE == 'commit' && github.ref != 'refs/heads/main' && (github.event_name == 'push' || github.event.pull_request.head.repo.full_name == github.repository)

|

||||

uses: stefanzweifel/git-auto-commit-action@v4

|

||||

with:

|

||||

branch: ${{ github.event.pull_request.head.ref || github.head_ref || github.ref }}

|

||||

commit_message: "[MegaLinter] Apply linters fixes"

|

||||

|

||||

46

.github/workflows/stale.yml

vendored

Normal file

@ -0,0 +1,46 @@

|

||||

# close-stale-issues (https://github.com/marketplace/actions/close-stale-issues)

|

||||

name: 'Close Stale Threads'

|

||||

|

||||

on:

|

||||

schedule:

|

||||

- cron: '0 4 * * *'

|

||||

workflow_dispatch:

|

||||

|

||||

permissions:

|

||||

issues: write

|

||||

pull-requests: write

|

||||

|

||||

jobs:

|

||||

stale:

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- uses: actions/stale@v6

|

||||

with:

|

||||

close-issue-message: >

|

||||

This issue has been automatically closed due to lack of activity. In an

|

||||

effort to reduce noise, please do not comment any further. Note that the

|

||||

core maintainers may elect to reopen this issue at a later date if deemed

|

||||

necessary.

|

||||

close-pr-message: >

|

||||

This PR has been automatically closed due to lack of activity.

|

||||

days-before-stale: 90

|

||||

days-before-close: 30

|

||||

exempt-issue-labels: 'bug / security-vulnerability, mod / announcement, mod / accepted, mod / reviewing, mod / testing'

|

||||

operations-per-run: 100

|

||||

remove-stale-when-updated: false

|

||||

stale-issue-label: 'mod / stale'

|

||||

stale-issue-message: >

|

||||

This issue has been automatically marked as stale because it has not had

|

||||

recent activity. It will be closed if no further activity occurs. PDA

|

||||

is governed by a small group of core maintainers which means not all opened

|

||||

issues may receive direct feedback. **Do not** attempt to circumvent this

|

||||

process by "bumping" the issue; doing so will result in its immediate closure

|

||||

and you may be barred from participating in any future discussions. Please see our

|

||||

[Contribution Guide](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/docs/CONTRIBUTING.md).

|

||||

stale-pr-label: 'mod / stale'

|

||||

stale-pr-message: >

|

||||

This PR has been automatically marked as stale because it has not had

|

||||

recent activity. It will be closed automatically if no further action is

|

||||

taken. Please see our

|

||||

[Contribution Guide](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/docs/CONTRIBUTING.md).
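As a worked example of the `days-before-stale: 90` and `days-before-close: 30` settings above, the sketch below computes the earliest dates on which a thread could be labeled stale and then closed. It is a simplified date calculation, not the logic of `actions/stale` itself; the function name `stale_timeline` and the sample date are illustrative assumptions, and the real action only acts when its daily scheduled run fires.

```python
from datetime import date, timedelta

# Values taken from the workflow configuration above.
DAYS_BEFORE_STALE = 90
DAYS_BEFORE_CLOSE = 30


def stale_timeline(last_activity):
    """Return the earliest (stale, close) dates for a thread.

    Assumes no further activity after `last_activity`.
    """
    stale_on = last_activity + timedelta(days=DAYS_BEFORE_STALE)
    close_on = stale_on + timedelta(days=DAYS_BEFORE_CLOSE)
    return stale_on, close_on


stale_on, close_on = stale_timeline(date(2023, 1, 1))
print(stale_on, close_on)  # 2023-04-01 2023-05-01
```

A thread left untouched therefore stays open for roughly 120 days in total before it is closed.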

|

||||

4

.gitignore

vendored

@ -1,3 +1,5 @@

|

||||

flask_session

|

||||

|

||||

# gedit

|

||||

*~

|

||||

|

||||

@ -38,5 +40,7 @@ node_modules

|

||||

powerdnsadmin/static/generated

|

||||

.webassets-cache

|

||||

.venv*

|

||||

venv*

|

||||

.pytest_cache

|

||||

.DS_Store

|

||||

yarn-error.log

|

||||

|

||||

@ -1,5 +0,0 @@

|

||||

language: minimal

|

||||

script:

|

||||

- docker-compose -f docker-compose-test.yml up --exit-code-from powerdns-admin --abort-on-container-exit

|

||||

services:

|

||||

- docker

|

||||

1

LICENSE

@ -1,6 +1,7 @@

|

||||

The MIT License (MIT)

|

||||

|

||||

Copyright (c) 2016 Khanh Ngo - ngokhanhit[at]gmail.com

|

||||

Copyright (c) 2022 Azorian Solutions - legal[at]azorian.solutions

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

|

||||

94

README.md

@ -1,47 +1,67 @@

|

||||

# PowerDNS-Admin

|

||||

|

||||

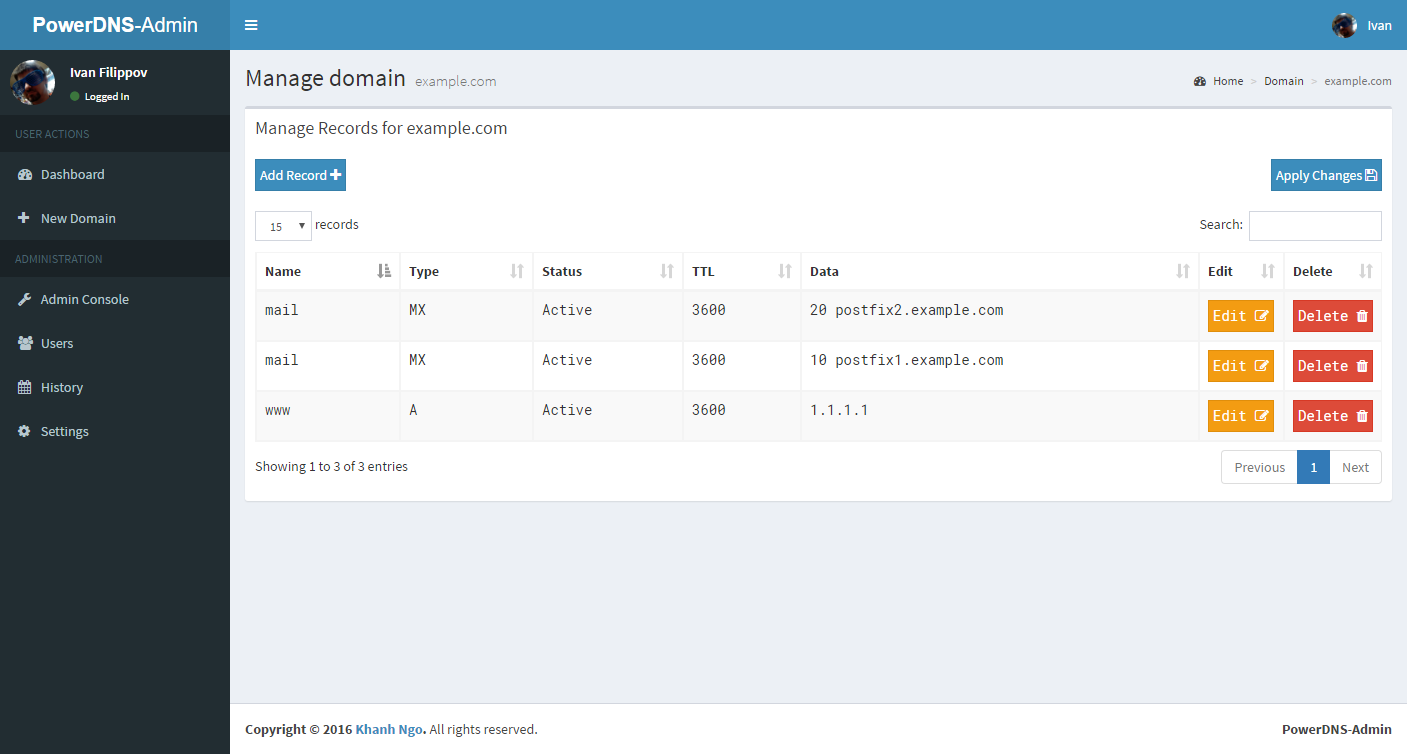

A PowerDNS web interface with advanced features.

|

||||

|

||||

[](https://travis-ci.org/ngoduykhanh/PowerDNS-Admin)

|

||||

[](https://lgtm.com/projects/g/ngoduykhanh/PowerDNS-Admin/context:python)

|

||||

[](https://lgtm.com/projects/g/ngoduykhanh/PowerDNS-Admin/context:javascript)

|

||||

[](https://github.com/PowerDNS-Admin/PowerDNS-Admin/actions/workflows/codeql-analysis.yml)

|

||||

[](https://github.com/PowerDNS-Admin/PowerDNS-Admin/actions/workflows/build-and-publish.yml)

|

||||

|

||||

#### Features:

|

||||

- Multiple domain management

|

||||

- Domain template

|

||||

- User management

|

||||

- User access management based on domain

|

||||

- User activity logging

|

||||

- Support Local DB / SAML / LDAP / Active Directory user authentication

|

||||

- Support Google / Github / Azure / OpenID OAuth

|

||||

- Support Two-factor authentication (TOTP)

|

||||

- Dashboard and pdns service statistics

|

||||

|

||||

- Provides forward and reverse zone management

|

||||

- Provides zone templating features

|

||||

- Provides user management with role based access control

|

||||

- Provides zone specific access control

|

||||

- Provides activity logging

|

||||

- Authentication:

|

||||

- Local User Support

|

||||

- SAML Support

|

||||

- LDAP Support: OpenLDAP / Active Directory

|

||||

- OAuth Support: Google / GitHub / Azure / OpenID

|

||||

- Two-factor authentication support (TOTP)

|

||||

- PDNS Service Configuration & Statistics Monitoring

|

||||

- DynDNS 2 protocol support

|

||||

- Edit IPv6 PTRs using IPv6 addresses directly (no more editing of literal addresses!)

|

||||

- Limited API for manipulating zones and records

|

||||

- Easy IPv6 PTR record editing

|

||||

- Provides an API for zone and record management among other features

|

||||

- Provides full IDN/Punycode support

|

||||

|

||||

## [Project Update - PLEASE READ!!!](https://github.com/PowerDNS-Admin/PowerDNS-Admin/discussions/1708)

|

||||

|

||||

## Running PowerDNS-Admin

|

||||

There are several ways to run PowerDNS-Admin. The easiest way is to use Docker.

|

||||

If you are looking to install and run PowerDNS-Admin directly onto your system check out the [Wiki](https://github.com/ngoduykhanh/PowerDNS-Admin/wiki#installation-guides) for ways to do that.

|

||||

|

||||

There are several ways to run PowerDNS-Admin. The quickest way is to use Docker.

|

||||

If you are looking to install and run PowerDNS-Admin directly onto your system, check out

|

||||

the [wiki](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/docs/wiki/) for ways to do that.

|

||||

|

||||

### Docker

|

||||

This are two options to run PowerDNS-Admin using Docker.

|

||||

To get started as quickly as possible try option 1. If you want to make modifications to the configuration option 2 may be cleaner.

|

||||

|

||||

Here are two options to run PowerDNS-Admin using Docker.

|

||||

To get started as quickly as possible, try option 1. If you want to make modifications to the configuration option 2 may

|

||||

be cleaner.

|

||||

|

||||

#### Option 1: From Docker Hub

|

||||

The easiest is to just run the latest Docker image from Docker Hub:

|

||||

|

||||

To run the application using the latest stable release on Docker Hub, run the following command:

|

||||

|

||||

```

|

||||

$ docker run -d \

|

||||

-e SECRET_KEY='a-very-secret-key' \

|

||||

-v pda-data:/data \

|

||||

-p 9191:80 \

|

||||

ngoduykhanh/powerdns-admin:latest

|

||||

powerdnsadmin/pda-legacy:latest

|

||||

```

|

||||

This creates a volume called `pda-data` to persist the SQLite database with the configuration.

|

||||

|

||||

This creates a volume named `pda-data` to persist the default SQLite database with app configuration.
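The `SECRET_KEY` value in the command above is a placeholder. One possible way to produce a long random replacement is Python's standard `secrets` module, sketched below; the helper name `generate_secret_key` and the 32-byte length are assumptions for illustration, not project requirements.

```python
import secrets


def generate_secret_key(num_bytes=32):
    """Return a random hex string with two characters per random byte."""
    return secrets.token_hex(num_bytes)


# Prints a fresh 64-character key on every run.
print(generate_secret_key())
```

The printed value can then be passed to the container with `-e SECRET_KEY='...'` in place of the placeholder.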

|

||||

|

||||

#### Option 2: Using docker-compose

|

||||

1. Update the configuration

|

||||

|

||||

1. Update the configuration

|

||||

Edit the `docker-compose.yml` file to update the database connection string in `SQLALCHEMY_DATABASE_URI`.

|

||||

Other environment variables are mentioned in the [legal_envvars](https://github.com/ngoduykhanh/PowerDNS-Admin/blob/master/configs/docker_config.py#L5-L46).

|

||||

To use the Docker secrets feature it is possible to append `_FILE` to the environment variables and point to a file with the values stored in it.

|

||||

Other environment variables are mentioned in

|

||||

the [AppSettings.defaults](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/powerdnsadmin/lib/settings.py) dictionary.

|

||||

To use a Docker-style secrets convention, one may append `_FILE` to the environment variables with a path to a file

|

||||

containing the intended value of the variable (e.g. `SQLALCHEMY_DATABASE_URI_FILE=/run/secrets/db_uri`).

|

||||

Make sure to set the environment variable `SECRET_KEY` to a long, random

|

||||

string (https://flask.palletsprojects.com/en/1.1.x/config/#SECRET_KEY)

|

||||

|

||||

2. Start docker container

|

||||

```

|

||||

@ -51,12 +71,28 @@ This creates a volume called `pda-data` to persist the SQLite database with the

|

||||

You can then access PowerDNS-Admin by pointing your browser to http://localhost:9191.
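The `_FILE` convention described in step 1 can be sketched as follows. This is a minimal illustration of the general Docker-style secrets pattern, not PowerDNS-Admin's own implementation: the function name `read_setting` is hypothetical, and letting the `_FILE` variant take precedence over the plain variable is an assumption.

```python
import os


def read_setting(name, environ=None, default=None):
    """Resolve a setting from NAME_FILE (a path) or from NAME itself.

    Assumption: when both are set, the file-based value wins.
    """
    environ = os.environ if environ is None else environ
    file_path = environ.get(name + "_FILE")
    if file_path:
        # The file holds the secret value; strip the trailing newline.
        with open(file_path, encoding="utf-8") as handle:
            return handle.read().strip()
    return environ.get(name, default)


# Example: falls back to the default when neither variable is set.
print(read_setting("SQLALCHEMY_DATABASE_URI", environ={}, default="unset"))
```

With `SQLALCHEMY_DATABASE_URI_FILE=/run/secrets/db_uri` set, such a helper would return the contents of that file rather than requiring the connection string in the environment.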

|

||||

|

||||

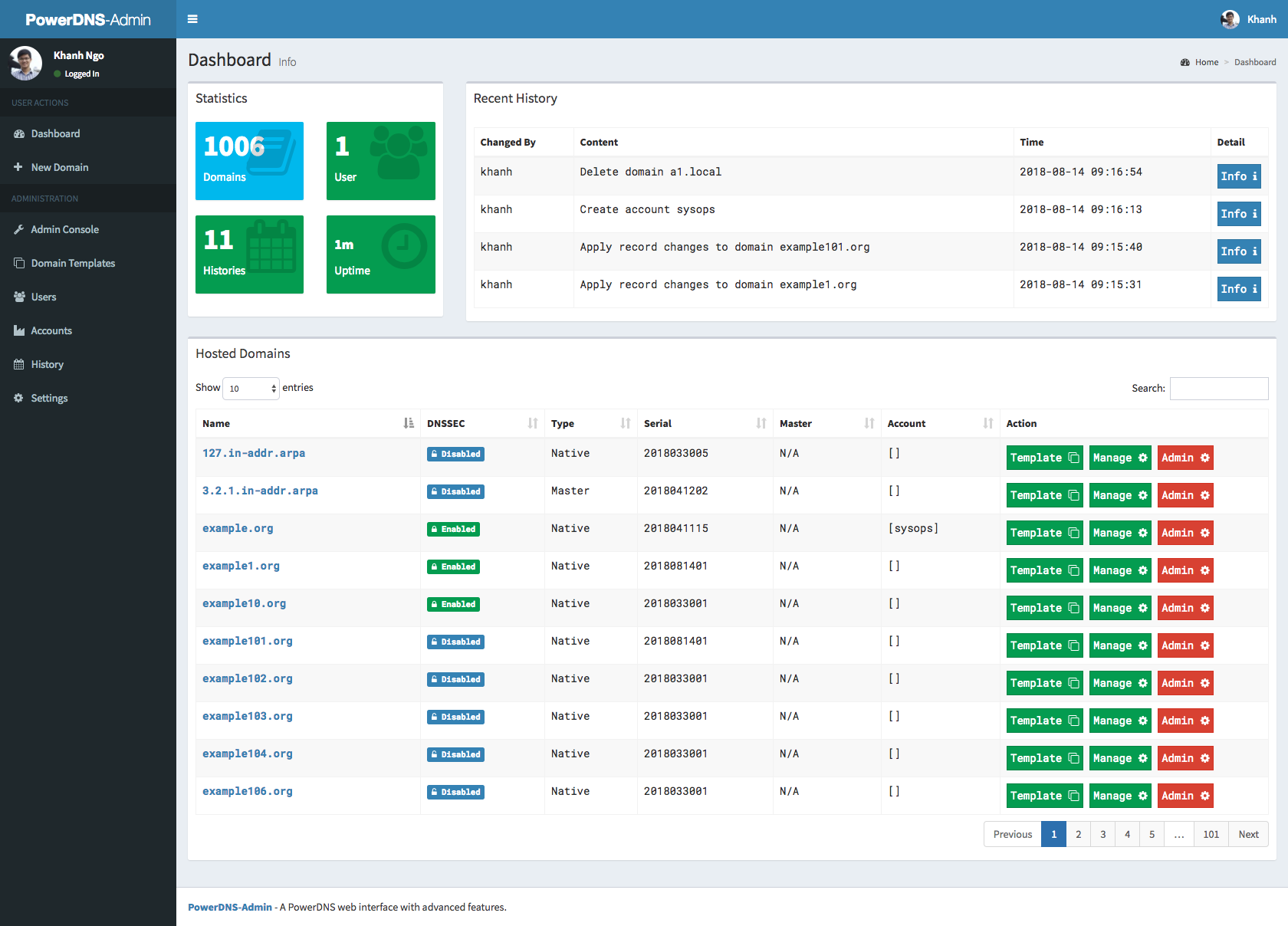

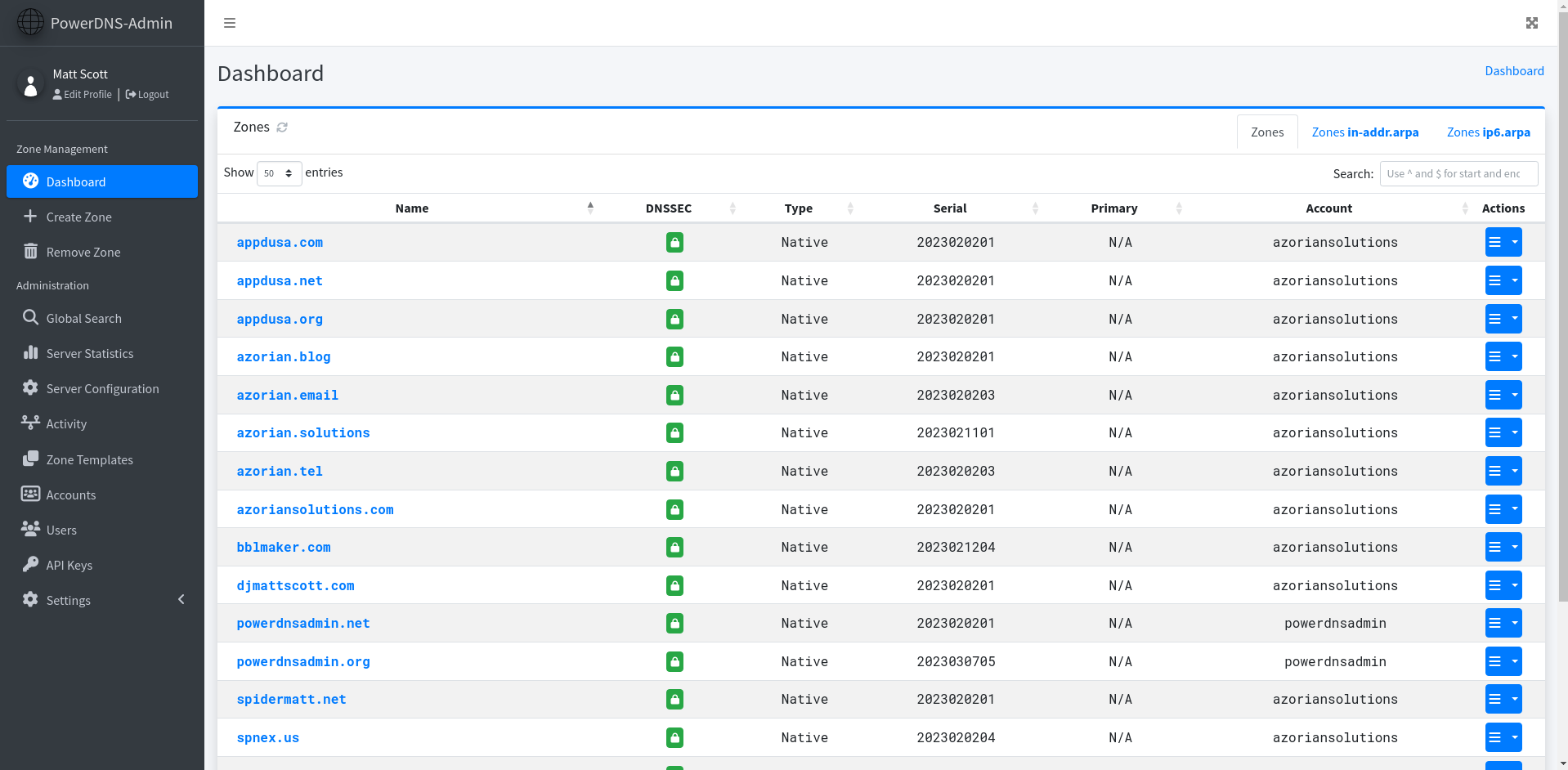

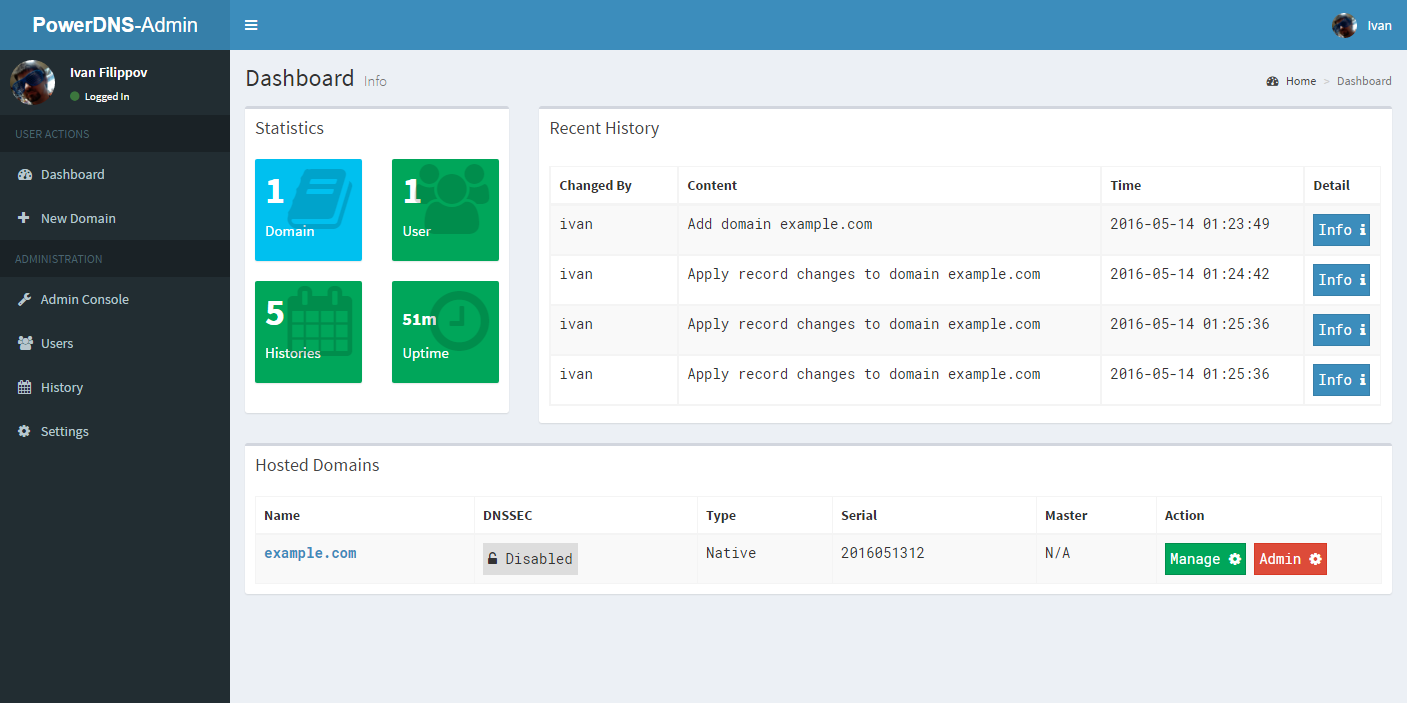

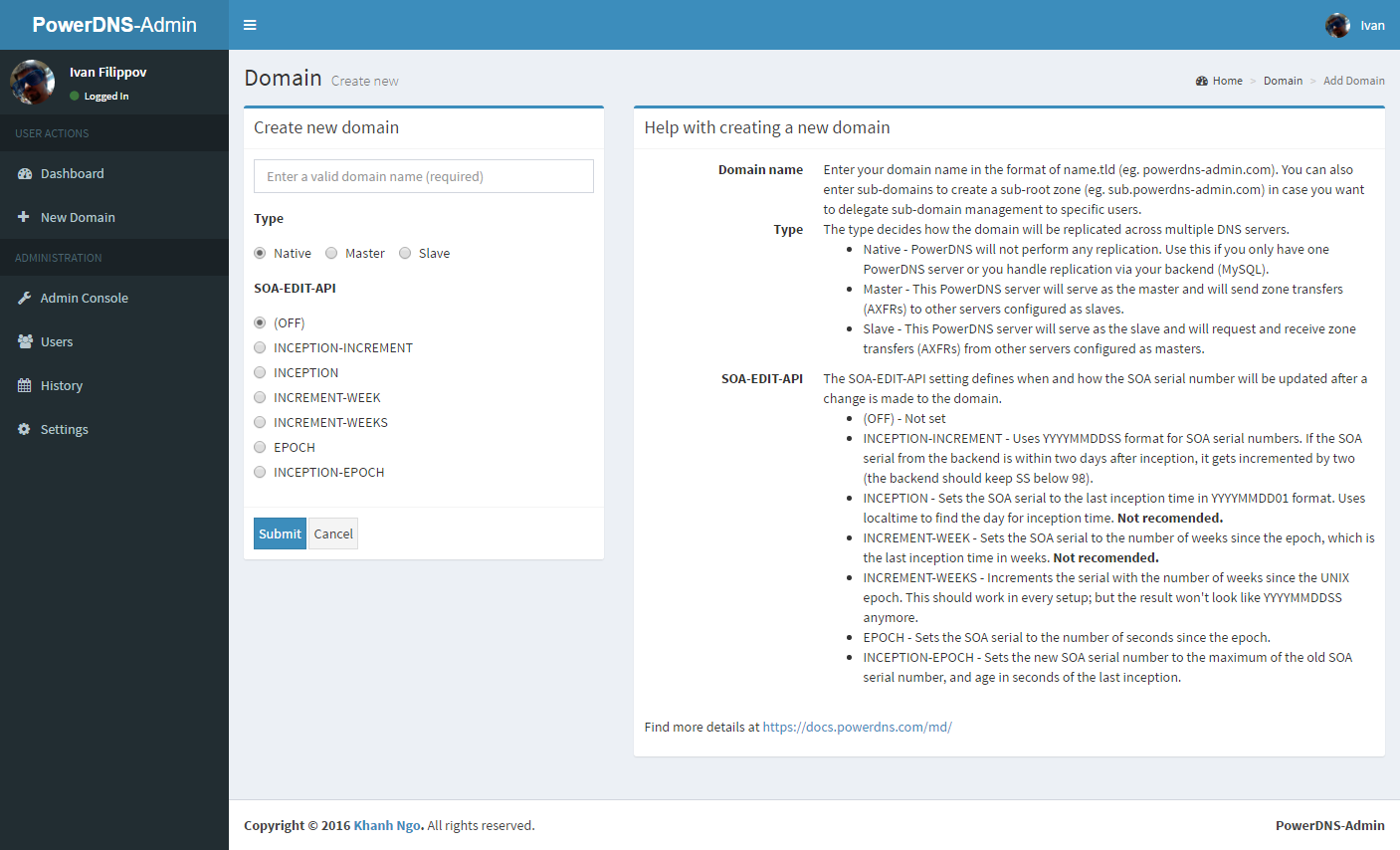

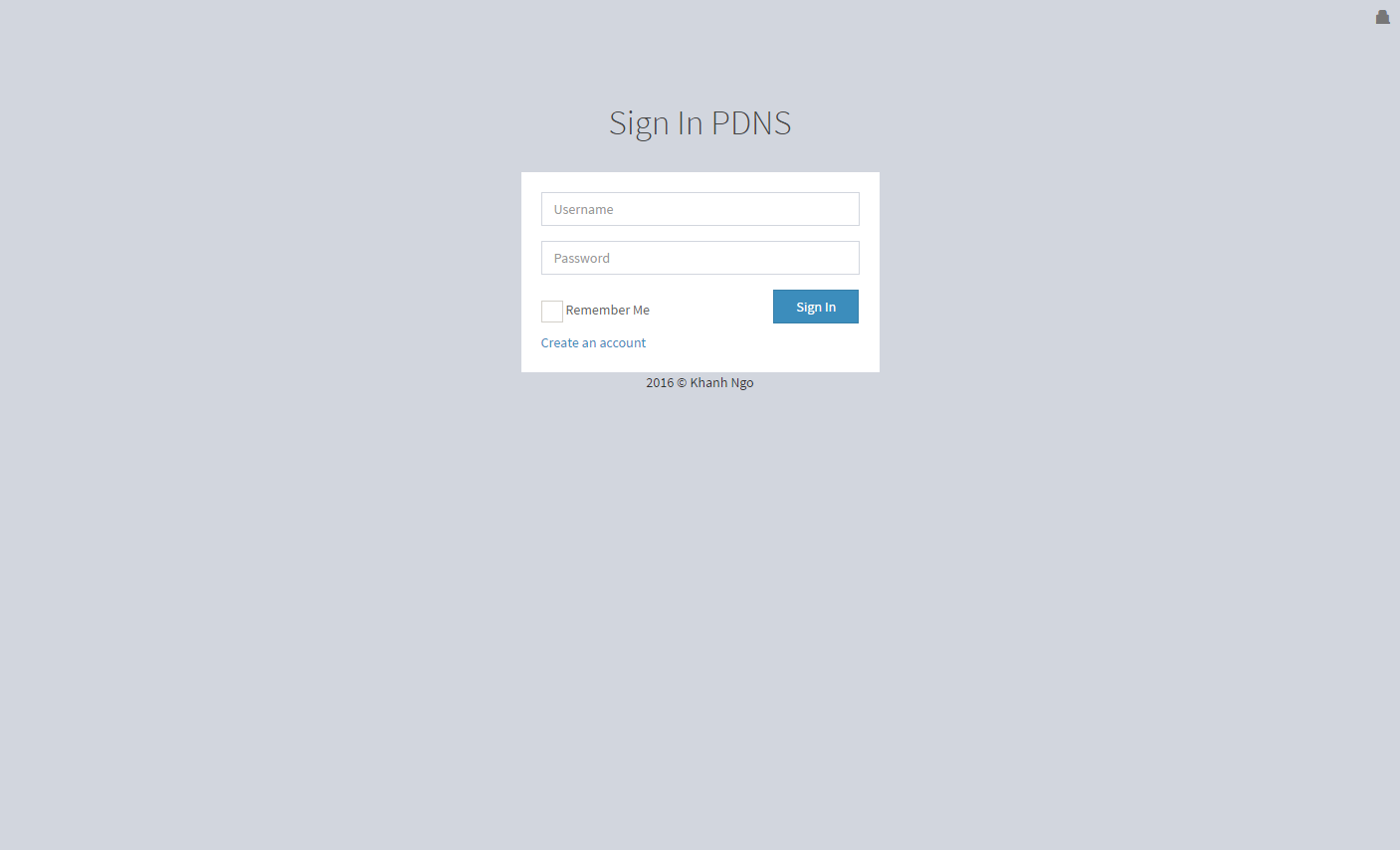

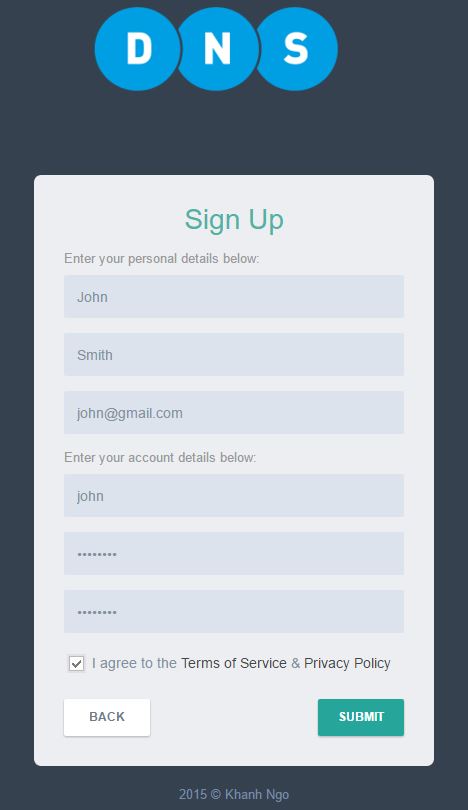

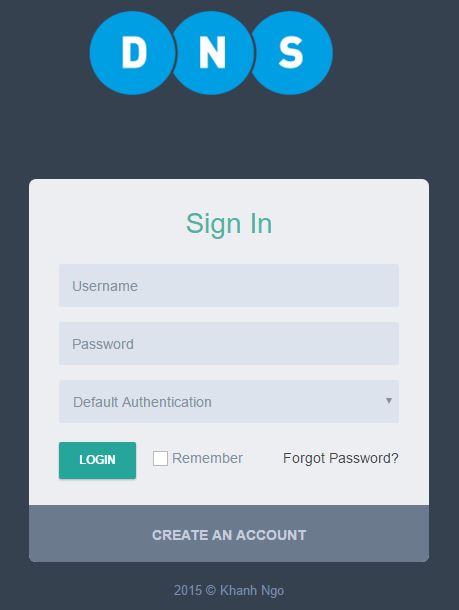

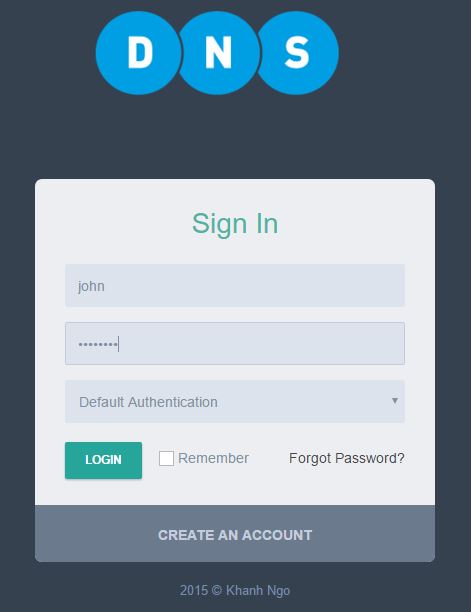

## Screenshots

|

||||

|

||||

|

||||

## LICENSE

|

||||

MIT. See [LICENSE](https://github.com/ngoduykhanh/PowerDNS-Admin/blob/master/LICENSE)

|

||||

|

||||

|

||||

## Support

|

||||

If you like the project and want to support it, you can *buy me a coffee* ☕

|

||||

|

||||

<a href="https://www.buymeacoffee.com/khanhngo" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/default-orange.png" alt="Buy Me A Coffee" height="41" width="174"></a>

|

||||

**Looking for help?** Try taking a look at the project's

|

||||

[Support Guide](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/.github/SUPPORT.md) or joining

|

||||

our [Discord Server](https://discord.powerdnsadmin.org).

|

||||

|

||||

## Security Policy

|

||||

|

||||

Please see our [Security Policy](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/SECURITY.md).

|

||||

|

||||

## Contributing

|

||||

|

||||

Please see our [Contribution Guide](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/docs/CONTRIBUTING.md).

|

||||

|

||||

## Code of Conduct

|

||||

|

||||

Please see our [Code of Conduct Policy](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/docs/CODE_OF_CONDUCT.md).

|

||||

|

||||

## License

|

||||

|

||||

This project is released under the MIT license. For additional

|

||||

information, [see the full license](https://github.com/PowerDNS-Admin/PowerDNS-Admin/blob/master/LICENSE).

|

||||

|

||||

31

SECURITY.md

Normal file

@ -0,0 +1,31 @@

|

||||

# Security Policy

|

||||

|

||||

## No Warranty

|

||||

|

||||

Per the terms of the MIT license, PDA is offered "as is" and without any guarantee or warranty pertaining to its operation. While every reasonable effort is made by its maintainers to ensure the product remains free of security vulnerabilities, users are ultimately responsible for conducting their own evaluations of each software release.

|

||||

|

||||

## Recommendations

|

||||

|

||||

Administrators are encouraged to adhere to industry best practices concerning the secure operation of software, such as:

|

||||

|

||||

* Do not expose your PDA installation to the public Internet

|

||||

* Do not permit multiple users to share an account

|

||||

* Enforce minimum password complexity requirements for local accounts

|

||||

* Prohibit access to your database from clients other than the PDA application

|

||||

* Keep your deployment updated to the most recent stable release

|

||||